Hello everyone,

I would like to get started with self-hosting, and I have two projects in mind.

Project A (for me): A NUC with Proxmox installed on it, running two VMs: Home Assistant and a NAS system that I haven’t chosen yet.

The only question I have with this project is:

- How can I access the NAS and HA separately from outside, given that my ISP does not offer a static IP and that access to each VM must be kept separate from access to Proxmox?

Project B (for my uncle):

A NUC (with Proxmox or not, I don’t know yet, perhaps simpler for making backups), with HA but especially Frigate.

The goal is to use Google Coral to do recognition on 3 video surveillance cameras.

My questions are:

- Is Coral really useful with 3 cameras?
- Do you need the USB or the M.2 version of Coral?
- Are there affordable NUCs with free M.2 slots?
- Won’t Proxmox add a layer of complexity with Coral/Frigate/a Zigbee dongle?

Thank you in advance for your help and sorry if my post is long.

PS: if you have recommendations for cameras that work with Frigate and are self-powered with solar panels, I’ll take them!

Edit: 8 April 2024

A little update. Thank you everyone for your super quick responses!

Regarding my uncle’s project and after big discussions, he is going to buy Reolink cameras and that’s it. This will be much simpler for maintenance than building a server.

Regarding my project: I chose a Beelink Mini S12 Pro with an N100 processor (for its low power consumption) and a 2.5-inch bay for an SSD for my Nextcloud.

I wondered whether I should take the opportunity to add Pi-hole, and that’s where new questions arise…

I see a lot of people installing Pi-hole on Docker. Should I put it on Docker, or create a VM?

Should Docker be installed on Proxmox or on a VM?

Is Proxmox really useful, or would it be better to install HA/Nextcloud/Pi-hole under Docker directly?

Should I use LXC or Docker?

I hear what you’re saying and honestly it’s not something I had thought about, so thanks for that.

For myself I should be good if your prediction comes true since I already have Home Assistant through my own domain using Cloudflare. I could theoretically move all my stuff to my own domain and Nginx, etc.
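For anyone wondering what the domain-plus-Nginx route boils down to: a DNS name (kept up to date by something like DuckDNS or Cloudflare, since the home IP changes) points at the house, and a reverse proxy picks the right VM from the hostname in the request. Here is a rough Python sketch of just that routing decision. The hostnames and LAN addresses are made up, and this only models the idea; it is not a proxy and not how Nginx is actually configured.

```python
from typing import Optional

# Hypothetical hostnames and LAN addresses; substitute your own.
# 8123 is Home Assistant's default port. Proxmox is deliberately
# left out of the map, so its web UI is never reachable this way.
BACKENDS = {
    "ha.example.org": "http://192.168.1.10:8123",
    "nas.example.org": "http://192.168.1.11:80",
}


def pick_backend(host_header: str) -> Optional[str]:
    """Return the internal URL for a request's Host header, or None."""
    # Drop an optional ":port" suffix and normalise case.
    # (Bracketed IPv6 literals are not handled in this sketch.)
    hostname = host_header.split(":", 1)[0].strip().lower()
    return BACKENDS.get(hostname)
```

So `pick_backend("ha.example.org")` gives the Home Assistant address, while an unknown name such as `proxmox.example.org` gives `None` and would simply be refused.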

I like Tailscale because I don’t have to do all that. I’m new to self-hosting (well, new to running multiple VMs), so finding something that just works with minimal effort is great for a noob. I wanna learn the things (networking), but I wanna learn other things (loads!) first.

Cloudflare and a domain weren’t as hard as DuckDNS and Nginx, but Tailscale was easier and cheaper than either in my adventures with Home Assistant. I’ve gone from hard to easy mode.

At some point a hobby has to cost money, and I may be happy to pay for Tailscale if there are more features. I’d like to replace SMB mounts with Tailnet mounts, but currently that’s not a thing to my knowledge.

Oh, and I’m not really shouting from rooftops on a self-hosted Lemmy server; it’s more like a quiet chat around a campfire, telling a potential newcomer about an easy way. It may cost money in the future, or they may make enough from businesses that they keep a free tier, but currently it’s free and easy.

Ahh, the shouting from the rooftops wasn’t aimed at you, but at the general group of people in similar threads. Lots of people shill Tailscale because it’s a great service for free, but there needs to be a level of caution with it too.

I’m quite new to the self-hosting game myself, but services like Tailscale, which have so much insight into and reach across our networks, are something that should, in the end, be self-hosted.

If you’re using SMB locally between VMs, maybe try Proxmox; https://clan.lol/ is something I’m looking into to replace Proxmox down the line. I currently share bind-mounts between multiple LXCs from the host Proxmox OS. Configuration is pretty easy, and there are lots of tutorials online for getting started.

Now then:

Are you sharing SMB mounts? I have my HDDs passed through to OMV and have considered just trying to pass them through to other VMs, but never tried because I don’t wanna break anything.

I have seen that you can share SMB mounts to Proxmox and use them there, but I don’t know whether you can use them in VMs too.

As it is, I really struggled with mounting SMB shares for a couple of weeks, then had an “aha” moment last weekend and have it all figured out now.

The Tailnet idea was so I can just mount everything to the Tailnet and stop worrying about whether it’s on this VLAN or that. I was trying to set up an OpenWrt container with a VPN, which I could use for any container that needs a VPN, but then those containers couldn’t see the main network properly…

I’ve given up on that now and have my SMB mounts all set up, but feel like pass-through would give better network speeds for moving things around.

Yeah there is a workaround for using bind-mounts in Proxmox VMs: https://gist.github.com/Drallas/7e4a6f6f36610eeb0bbb5d011c8ca0be

If your drives are mounted to the Proxmox host (and not to a VM), you could try an LXC for the services you are running. If you require a VM, then the workaround above would be the way to go, after backing up your data.

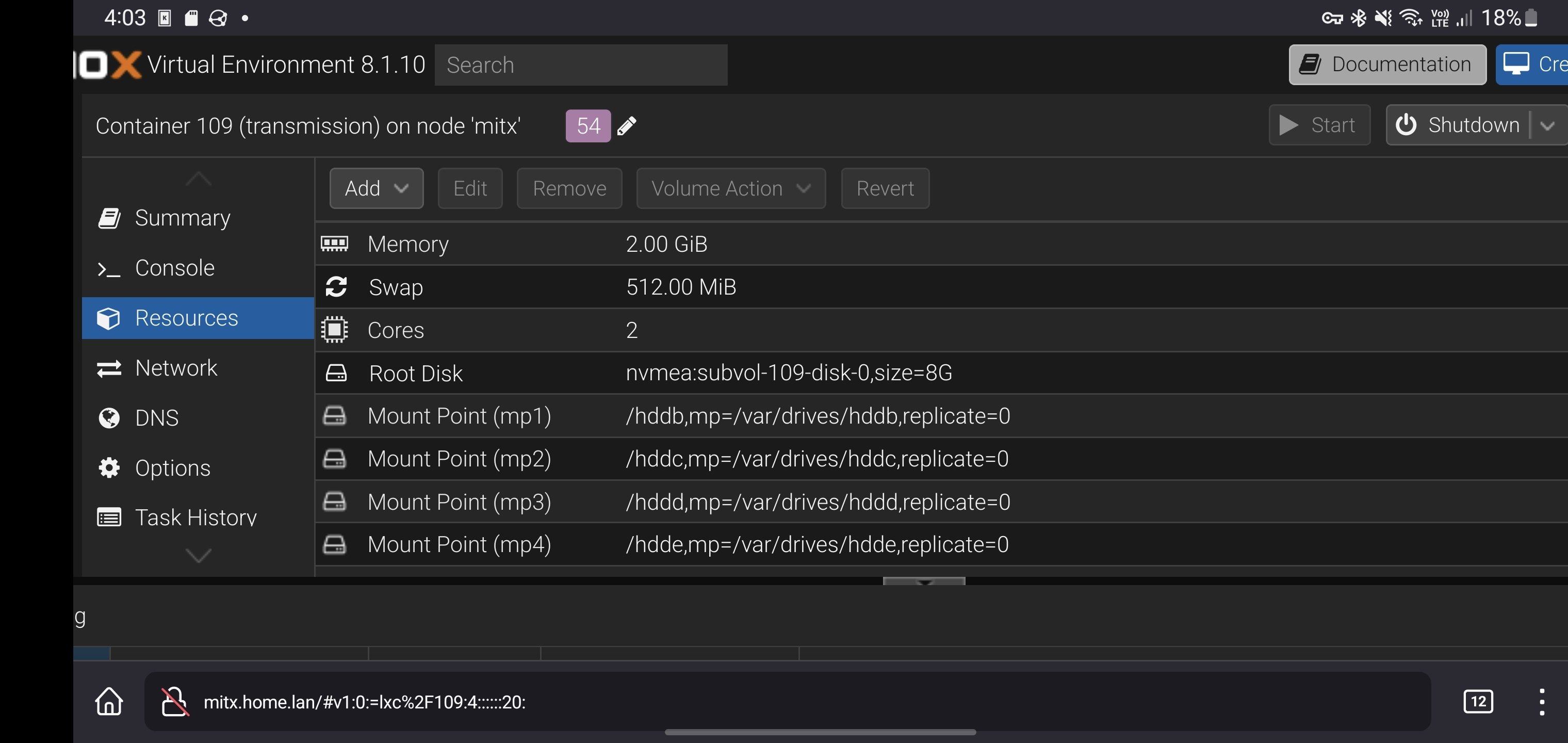

I’ve got my drives mounted in a container as shown here:

Basicboi config, but it’s quick and gets the job done.

I’d originally gone down the same route as you with VMs and shares, but it was all too much after a while.

I’m almost rid of all my VMs; Home Assistant is the last package I’ve yet to migrate. I’ve migrated Frigate to a Docker container under NixOS, with a Tailscale exit node under NixOS too, while the vast majority of my other packages are already in LXC.

This all sounds awesome. So, ELI5: I have all my drives mounted to Proxmox, then passed through to OMV in a VM.

I can just mount these same drives to containers no issues right now, and I can add them to VMs using your link?

I would like to get down to LXCs too, but I’ve found VMs so much easier to set up and use. I’ll try your way.

I’ve not tested the method linked but yeah it would seem like it’s possible via this method.

My lone VM doesn’t need a connection to those drives, so I’ve had no reason to try it.

You could probably run OMV in an LXC and skip the overhead of a VM entirely. LXCs are containers, so you can just edit the config files for the containers on the Proxmox host and pass drives right through.
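To make the “edit the config files” part a bit more concrete, here is a small sketch rather than an official tool. As far as I know, Proxmox keeps each container’s config at /etc/pve/lxc/<CTID>.conf on the host, and a bind mount is a line of the form `mpN: <host path>,mp=<path inside the container>`. The helper name and the /mnt/media paths below are hypothetical; the function only builds the line, and you would still add it to the config yourself (after a backup).

```python
def bind_mount_line(index: int, host_path: str, container_path: str) -> str:
    """Build an 'mpN:' bind-mount entry for a Proxmox LXC config file."""
    if index < 0:
        raise ValueError("mount point index must be non-negative")
    if not (host_path.startswith("/") and container_path.startswith("/")):
        raise ValueError("both paths must be absolute")
    # Host path first, then where it should appear inside the container.
    return f"mp{index}: {host_path},mp={container_path}"


# Hypothetical example: expose /mnt/media from the host at the same path.
print(bind_mount_line(0, "/mnt/media", "/mnt/media"))
# prints: mp0: /mnt/media,mp=/mnt/media
```

The container usually needs a restart before a new mount point shows up.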

Your containers will need to be privileged. If you already have something set up as unprivileged, you can also clone the container and make the clone privileged!

I think you guys lost me haha!