- cross-posted to:

- becomeme@sh.itjust.works

If you’re worried about how AI will affect your job, the world of copywriters may offer a glimpse of the future.

Writer Benjamin Miller – not his real name – was thriving in early 2023. He led a team of more than 60 writers and editors, publishing blog posts and articles to promote a tech company that packages and resells data on everything from real estate to used cars. “It was really engaging work,” Miller says, a chance to flex his creativity and collaborate with experts on a variety of subjects. But one day, Miller’s manager told him about a new project. “They wanted to use AI to cut down on costs,” he says. (Miller signed a non-disclosure agreement, and asked the BBC to withhold his and the company’s name.)

A month later, the business introduced an automated system. Miller’s manager would plug a headline for an article into an online form, an AI model would generate an outline based on that title, and Miller would get an alert on his computer. Instead of coming up with their own ideas, his writers would create articles around those outlines, and Miller would do a final edit before the stories were published. Miller only had a few months to adapt before he got news of a second layer of automation. Going forward, ChatGPT would write the articles in their entirety, and most of his team was fired. The few people remaining were left with an even less creative task: editing ChatGPT’s subpar text to make it sound more human.

By 2024, the company laid off the rest of Miller’s team, and he was alone. “All of a sudden I was just doing everyone’s job,” Miller says. Every day, he’d open the AI-written documents to fix the robot’s formulaic mistakes, churning out the work that used to employ dozens of people.

Pretty dystopian article.

But this will continue, until oligarchs like Altman, Cook, Nadella etc. start getting put into difficult situations; ones that create very strong incentives for them to show humanity (or at least emulate it).

It’s never the managers who suffer first, is it?

It’s never the upper management, but they don’t actually do anything but landlord. Lower managers are being replaced by bots that police the bottom rung workers.

Anyhow, even when AI was very much not working right at all, the ownership class was eager to replace creative workers, so we’ve known for over a year or two that they’re gunning to end creative work and replace it with menial work.

I don’t know what the Mahsa Amini moment is going to be to spark the general worker uprising, but news about the conditions being right comes in every day.

Human condition… The water flows down

That’s not water

When your profession is to be a nice guy, and your protocols of communicating with others are not strictly regulated, replacement is not an easily solvable task.

Bu-ut I think that’ll eventually happen too. Or more precisely, things allowing a company to reduce workforce that much allow self-employed people to take a certain niche.

Unless for copyright and CP protection self-employment gets banned.

But these people who are getting paid to humanise AI are fantastic opportunists. Sure, it’s not a great job, but they have effectively recognised a new seat at a moment when we’re redefining the idea of productivity.

That’s fucking soul crushing.

We just fired you to hire this machine, however, if you’d like to stick around and edit for it, we will pay you 1/4 to 1/2 your current rate.

Jesus…fuck that guy.

The human serfs will have to proofread increasingly voluminous, numerous and complex output from ai systems. The product has become the master. Until the systems develop a sense of ‘truth’ beyond numerical statistics, generative ai is pretty much a toy.

> Until the systems develop a sense of ‘truth’ beyond numerical statistics, generative ai is pretty much a toy.

I’ll start by saying I am pro-worker, pro-99%, pro-human.

Now, I must refute your assertion for specific domains (and specific working styles), e.g. translation (or a preference for editing over drafting/coding from a blank page). If money used to hit your bank account every two weeks because you translated or provided customer service for a company, and now that money doesn’t come in anymore, it wouldn’t feel too playful or like a toy is involved.

This is today, not “until” any future milestone.

Re-sharing some screenshots I took a month or so back, below.

November 2022: ChatGPT is released

April 2024 survey: 40% of translators have lost income to generative AI - The Guardian

Also of note from the podcast Hard Fork:

There’s a client you would fire… if copywriting jobs weren’t harder to come by these days as well.

Customer service impact, last October:

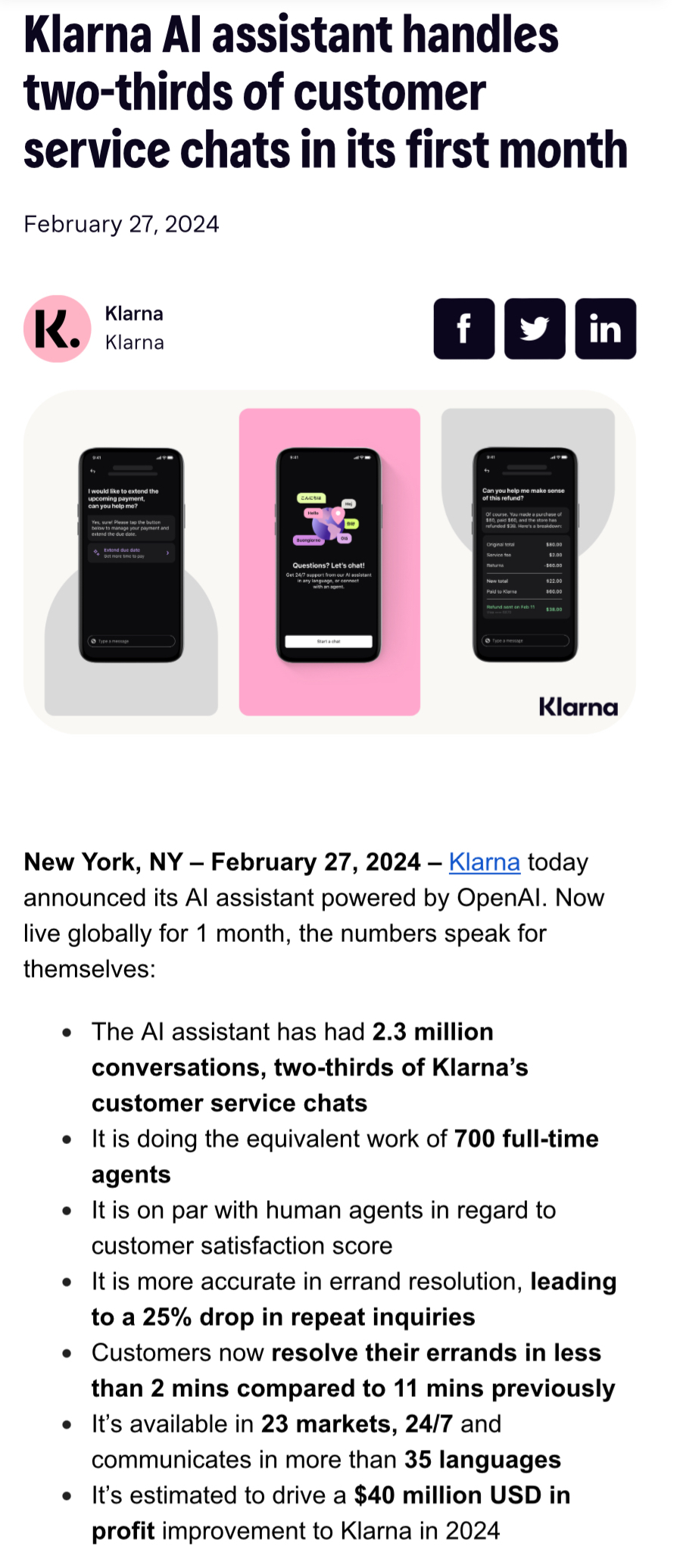

And this past February - potential 700 employee impact at a single company:

If you’re technical, the tech isn’t as interesting [yet]:

Overall, costs down, capabilities up (neat demos):

Hope everyone reading this keeps up their skillsets and fights for Universal Basic Income for the rest of humanity :)

Air Canada did that too. Only the lack of precision made offers to customers they weren’t prepared to honor.

Have to wonder how much Klarna invested in their tech, assuming they’re not big ole fibbers

Fibbing for investors and big tech. Name a more iconic duo?

I think retrieval augmentation and fine-tuning are the biggest tools for making the results more refined (or for better referencing a document as a source of truth). The other, ironically, is just regular deterministic programming.

If it’s just a “toy” then how is it able to have all this economic impact?

it’s an economic bubble, it will eventually burst but several grifters will walk out with tons of money while the rest of us will have to endure the impact

There were bubbles about things which were promising profits in the future but at the moment nobody knew how exactly. Like the dotcom bubble.

This one does bring profits now in its core too, but that’s a limited resource. It will be less and less useful the more poisoned with generated output the textual universe is. It’s a fundamental truth, thus I’m certain of it.

Due to that happening slowly, I’m not sure there’ll be a bubble bursting. Rather it’ll slowly become irrelevant.

While I agree with most points you make, I cannot see a machine that is, at a bare minimum, able to translate between arbitrary languages become irrelevant anytime in the foreseeable future.

OK, I agree, translation is useful and is fundamentally something it makes sense for.

Disagree about “arbitrary”, you need a huge enough dataset for every language, and it’s not going to be that much better than 90’s machine translators though.

It’s closer, but in practice still requires a human to check the whole text. Which raises the question, why use it at all instead of a machine translator with more modest requirements.

And also this may poison smaller languages with translation artifacts becoming norm. Calque is one thing, here one can expect stuff of the “medieval monks mixing up Armorica and Armenia” kind (I fucking hate those of Armenians still perpetuating that single known mistake), only better masqueraded.

While I am sure some job displacement is happening, getting worked up over AI at this point seems odd when we had decades of blue-collar offshoring that is now being kinda reversed and replaced with white-collar offshoring.

Or here is another one: immigration at both levels.

Currently AI’s biggest impact is providing cover for white-collar offshoring.

Tech support and customer service tested the waters; now they are going full force at professional services.

Bigger impact all around and not much discussion.

Well, while my views on economics are still libertarian, there’s one trait of very complex and interconnected systems, like market economies (as opposed to Soviet-style planned economy, say, which was still arcanely complex, but with fewer connections to track in analysis, even considering black markets, barter, unofficial negotiations etc), - it’s never clear how centralized it really is and it’s never clear what it’s going to become.

It’s funny how dystopian things from all extremes of that “political compass” thing come into reality. Turns out you don’t need to pick one, it can suck all ways. Matches well what one would expect from learning about world history, of course.

What I’m trying to say is that power is power, no matter what’s written in any laws. The only thing resembling a cure is keeping as much of it as possible distributed at all times. A few decades from now it may be found again, and then after some time forgotten again.

Whatever we got is deff not distributed in any sense of the term lol

That’s my point.

The popular idea that you can avoid giving power to the average person, giving it all to some bureaucracy, or big companies in some industry, or some institutions, and yelling “rule of law”, has come to its logical conclusion.

Where bureaucrats become sort of a mafia\aristocracy layer, big companies become oligopolies entangled with everything wrong, and institutions sell their decisions rather cheap.

Speculation. 100% speculation. A tool is precise. A toy is not. Guided ai, e.g. for circuit optimizing or fleet optimization is brilliant. Gai is not the same.

Evidently “precision” isn’t needed for the things the AI is being used for here.

Right. And neither is the investment it is attracting.

https://www.statista.com/topics/1108/toy-industry/#topicOverview

Because toy industry huge

Welcome to the new Industrial Revolution, where one person can do the work of many. Sure, mass produced ~~goods~~ content aren’t as good as handmade artisanal ~~products~~ writing, but there’s a huge market for it.

There really isn’t though. Very very few writers live off of writing alone.

A huge market means there’s lots of demand for the products. That doesn’t have to translate to lots of jobs for the people producing that product.

Is there demand tho? Once people catch that shit is AI they seem to lose interest.

Can’t do much of resist anymore because shit sounds like bots half the time. Can’t even tell if it is bots tbh but can’t shake that feeling either. Lost all interest.

You don’t think there’s demand for news articles? The comment I’m responding to said there isn’t a huge market. That’s all I’m arguing against here, that there is a huge market. Whether AI can fulfill it a separate issue, one that we’ll see play out.

I don’t think people want to read AI articles but I could be wrong tbh

Time will tell how general population reacts to it.

If they have an emotional reaction to the headline, (positive or negative) they click. Clicks make money.

Whether they click to read the fluff or click to share the headline doesn’t matter, a click is a click.

People still click tracking links?

The demand may not be by the end user but by businesses that need to fill stuff with filler text. Say you’re working for an automotive company and have to pull off a big email campaign: you can use generative AI to help you type up a couple dozen emails. Sure, they’ll be crappy, but you finished your work by the deadline, so spending the company’s money on a service like that seems worth it.

If most people’s jobs really are this mindless, then I don’t know what to say…

We got a generation of educated people writing sloppy emails nobody needs to be correct?

It’s not that they shouldn’t be correct, it’s that people are expected to do the job of multiple people with limited budgets, so they contract their work out to services or don’t take the time to double check stuff.

When I worked with the marketing people it was a team of 3 that had to cover the US, Mexico and Canada, so they would have people on retainer to make things like art or text for a lot of the stuff, but I can see how they could use a service that used AI for those things.

Look at what happened with Wacom, so I just see this kind of cost cutting is appealing to businesses that want to cut costs at the expense of quality

https://community.wacom.com/en-us/ai-art-marketing-response/

Again I’m not saying it’s good but there is demand for this stuff as shitty as it is

Huge market for generated text? Can you point out where that market is?

Absolutely, I maintained a bunch of small business websites in the 2010s and they all had blogs attached to them, they paid people to write generic articles about nutrition or whatever just so they’d get the SEO boost out of it from Google.

No one was reading these articles. No one cares about these articles. But posting them was very important for Google to rank you higher than your competitors.

That’s parasitism give or take, I meant - market of some real need.

Some people dig the holes, some people fill them. Everyone’s grateful for the job.

If search engines fixed the bullshit signifiers, the fake would dry up.

Yeah that’s what i am kinda shooting for. a lot of these jobs related to big tech ad eco system, it is very hard for me to care… but i also know they are coming for everyone too lol

> a lot of these jobs related to big tech ad eco system

That’s military logic in some sense. That ecosystem makes many people dependent on it. The “I use it like everyone else, but I hate it and I’ll stop if it crashes” argument is wrong. The whole mass of that ecosystem is comprised of such people.

> but i also know they are coming for everyone too lol

It’s an old story. Most of this thing’s optimization potential lies in a few niche areas. It can’t be put where you need precision or reliability. It can’t be put where a statistical guess about human decision is insufficient. And it can’t be put where you need a human because of, sorry, smiles and nice bodies being required.

It won’t be like the industrial revolution, because that optimized real production, very solid basic necessary jobs. This is optimizing billboard ads and newspaper boys, and people who make things we already try not to pay attention to.

It may make some other workplaces a bit more efficient. And I agree that oligopoly, every piece of base (territory and natural resources) being already owned and technological progress, combined, lead to a bleak future.

It’s more like publishers etc. believe they can just produce more and more, while not realizing the market for such things is already oversaturated.

That’s like spammers trying to find luck in the market of Nigerian letters, TBH. Seems unlikely to lead to anything.

There’s a good reason why many call generative AI a scam.

Like he said at the end, nobody is reading the garbage.

I think if something is written by AI, the only way to read it is to make another AI read it and summarize it. Then you can still decide to read the summary or not.

It’s probably replacing garbage written by humans that nobody was reading either.

So in this case, garbage content that nobody reads, AI is probably a good idea.

Yeah, the guy’s team was writing “articles and blog posts promoting a tech company”.

Letting an LLM mangle that isn’t exactly a huge loss.

Was this labor even needed in the first place?

It wasn’t. It’s the advertising\SEO industry, I think. Which is advertisers scamming clients who want to get clients, without clear feedback, and website owners scamming advertisers by sending their way occasional clicks.

Basically irritating garbage, 99% yielding fucks and adblocker installations, 1% yielding unconscious recognition of product and some monies going back. Apparently it’s profitable.

I guess the company was providing a kind of UBI? Not sure what will happen when all of those non-jobs disappear…

There’s nothing inherently wrong with doing quality assurance work, but I think the workers are being fooled into thinking it’s less valuable work than their old job. In fact, based on QA in other industries, I’d say these workers should be getting paid more. This is why unions are important, otherwise people just get fooled or bullied into accepting bad deals