Interesting take on compatibility vs performance. I gotta imagine capturing user data and sending it to a cloud collector is also a big culprit.

Massive amounts of telemetry data, plus nearly every app these days being a web app, just chews through your hardware. We use Teams at work and it’s just god awful. Hell, even Steam is a problem. Even having your friends list open can cost you fps in some games.

“web app” gets used disparagingly frequently, but they can be done well. I use a couple PWAs; one for generating flight plans for simming (simbrief) and what I’m writing this on currently, wefwef. I think they’re fine in the right circumstances, and it’s harder for them to collect telemetry compared to a native app.

I think PWAs use your already installed browser, whereas apps like Teams use Electron, which bundles its own browser, and a lot of people see that as wasteful.

Given how prevalent web technologies are, I am honestly surprised there isn’t a push towards having one common Electron installation per version and having apps share that. Each app bundling its own Electron is just silly.

Teams eats up my macos memory something awful and it’s just sitting there… no one saying anything.

wtf I hate it

When I log in to my work laptop in the morning, before even doing anything, Teams is the app using the most resources: typically 300MB-400MB of memory while doing nothing.

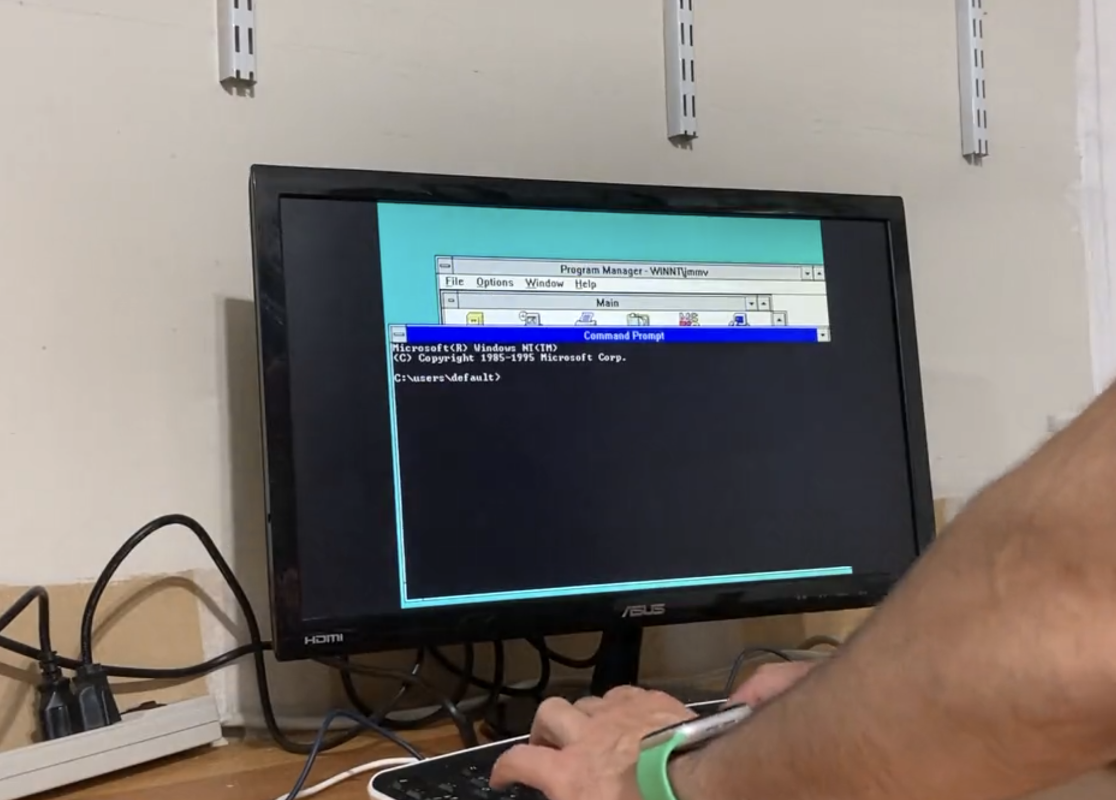

As an individual who has worked in IT for over a decade, yes. We keep making things incredibly fast, and for complex operations that speed gain is realized, but for diverse, simple tasks there’s ultimately very little difference between something rather old and something rather new. The most significant uplift in real-world performance has been the SSD. Eliminating, or nearly eliminating, the seek-time delay of spinning disks is by far the best thing that’s happened for performance. Newer OSes and newer hardware go hand-in-hand, because added hardware speed brings added software complexity, which is why a late-stage Windows 7 system will typically outperform an early-stage Windows 10 machine. By “stage” I mean where the OS sits in its time as the “current” release: early-stage is when it has recently become the newest OS, and late-stage is when it is soon to be overshadowed by something newer.

Microsoft made great performance gains over many years after migrating Windows to all NT-kernel OSes. From around Windows XP, things got faster and faster, right up to around Windows 8. Windows 7 was the last version, IMO, that was designed to be faster than its predecessors, with more speed improvements than losses from the added complexity of the OS; from then on, we’ve been adding complexity (i.e., slowing things down) at a faster rate than we can optimize and speed things up. Vista was a huge leap forward in security, adding code signing, specifically for drivers and such; in that process, MS streamlined drivers to run in a more-native way, though kernel-mode drivers were more or less a thing of the past. This, however, caused a lot of issues, as XP-era drivers wouldn’t work with Vista very well, if at all. Windows 7 further streamlined this, and as far as I know, there have been minimal if any improvements since.

In all of these cases, based on the XP base code (derived from NT4), it is still functionally slower than 9x, since all versions of 9x are written in x86 machine code rather than C, which is what NT is based on AFAIK. The migration to C code brought two things with it. The first, and most pertinent, is slowdowns from compiler optimizations, or rather, the lack of them. The second is portability: as the codebase is now C, the platform can be recompiled fairly easily for different architectures. This was a long-term play by MS to ensure future compatibility with any architecture that may arise; all MS would need to make Windows work on x (whatever arch is “next”) would be to write a C compiler for x, then begin compiling and debugging the code. MS has been ready, and has even produced several builds of Windows for ARM and for MIPS specifically, and can likely migrate to RISC-V anytime they want (if they haven’t already). This was the most significant slowdown from 9x to XP.

As time went on, security features started being integrated into the OS at the kernel level, everything from driver and application signing, to encryption (full-drive, aka bitlocker, and data-in-flight, aka AES or HTTPS), and more. The TPM requirement for Windows 11 is the next basic step in this march forward for security. They’re going this way because they have to. In order to be considered a viable OS for high-security applications, like government use, they must have security features that restrict access and ensure the security of data both in flight and at rest (on disk), the TPM is the next big step to doing that. The random seed in the TPM is far superior to any pseudo-random software seed that may exist, and the secured vault ensures that only authorized access is permitted to the security keys on the TPM, for things like full-disk encryption. The entire industry has been moving this direction just under the surface; and if you haven’t had an eye to watch for it, then it would be completely invisible to you. This describes most consumers and especially gamers, who just want fast games and reliable access to their computers.

Speaking of consumers, at the same time, MS, like almost all software/web/whatever companies, have been moving towards making you, and specifically, your data, into a product they can sell. this is the Google approach. As an entity, at least until fairly recently, Google didn’t make any money from their customers directly, instead they harvested their data, profiled all the users and sold advertisements based on that information, and they were INCREDIBLY successful at it and made plenty enough to keep them afloat. They’ve recently gotten into hardware and service (all the “as a service”) offerings which has allowed them to grow. Facebook and Amazon have both done the same (among others but there’s too many to list), and many other companies, including MS, are wondering “why not us too” because they see dollar signs down the road, as long as they can collect enough information about you to sell; So MS in their unique position, can basically cram down your throat all the data-harvesting malware they want, provided it never gets flagged as what it really is: MALWARE.

IMO, since Windows 7, they’ve been doing recon on all their users to try to obtain this information, which is part of the reason why everyone is being forced into using their Microsoft accounts for their PC logins on any non-pro and non-enterprise version of windows. This way they can tie the data they’re collecting about you, to you specifically. As of Windows 11, this has ramped up significantly. More and more malware to observe you and your behavior, and basically build an advertising profile for you that they can sell. They want more information all the time, and the process of collecting that information and pushing it back to MS to sell has become more and more invasive as time goes on; these processes take computing power away from you as the consumer to serve MS’s end goals, of selling you, their paying customer, to their advertisers. They will be paid on both sides (by you, for their product, and by their advertisers for your information). The worst part about it is that they haven’t really had any significant push-back on any of it.

If you go back to Windows 9x, or DOS/Windows 3.1 days, none of this was happening, so the performance you got was the performance the hardware could deliver; now, all of your programs have to go through so many layers to actually hit the hardware to be executed that it’s slowed things down to the point where it’s DRAMATICALLY NOTICEABLE. So yeah, if you’re doing something intensive, like running a compression or encryption or benchmark or similar, you’ll get very close to the real performance of the system, but if you’re dynamically switching between apps, launching relatively small programs frequently, and generally multitasking, you’re going to be hit hard by this. Not only does the OS need to index your action to build your advertising profile, it also needs to run the antivirus to scan the files you’re accessing to make sure nobody else’s malware is going to run, and observe every action you take, to report back to the overlords about what you’re doing. In this always-on, always-connected world, you’re paying for them to spy on you pretty much all the time. It’s so DRAMATICALLY WORSE with Windows 11 that it’s becoming apparent that this is happening, to everyone; as someone who has seen all of this growing from the shadows in IT for a decade, I’m entirely unsurprised. Simply upgrading your computer to a newer OS makes it slower, always. I’ve never wondered why, I’ve always known. There are more moving parts they’re putting in the way. It’s not that the PC is slower, it just has SO MUCH MORE TO DO that it doesn’t move faster, and often, it’s noticeably slowed down by the processes.

Without jumping ship to Linux or some other FOSS, you’re basically SOL… Your phone is spying on you (whether android or iOS), your PCs are spying on you (whether Chromebook, Windows, or MAC), your “smart” home everything is spying on you, whether you have amazon alexa, google home, or Apple’s equivalent… Now, even your car is starting to spy on you. Regardless of what it is, if it’s more complex than a toaster, it’s probably reporting your information to someone. There’s very few if any software companies that are not doing this. Your choice then becomes a choice of equally bad options of who collects your data to sell it to whomever wants it, or go full tinfoil-hat and start expunging everything from your life that has a circuit more complicated than a 1980’s fridge in it, and going to live in the forest. I’m doomed to sell my data to someone; so far it’s mainly been MS and Google. My line of work doesn’t really allow me to go “off-grid” and survive in my field; not everyone is in my position. So make your choice. This isn’t going to get better anytime soon, and as far as I can see, it will never stop… so choose.

2 decades of IT here. I’m comfortable on linux but still irritated that there is no phone that won’t spy on me. I’m not irritated enough to go through the effort of jailbreaking my android. I own zero smart home products. Firefox and ublockorigin seems to block 99% of ads.

Not only are we sold as advertising profiles, but we are also getting profiled by our governments. The Chinese identification system is extremely advanced. Your favorite democracy is not far behind. The UK fines you automatically if you drive your car to the wrong place, etc.

Glad I’m going to be old and dead before the worst of it. Third world countries are still relatively untouched.

As much as I don’t appreciate the state in which they left the world for the rest of us, the boomer generation really did live in a golden era: a technological boom, where technology was improving lives faster than it was doing anything else. The advent of modern refrigeration techniques, consumer vehicles, automatic telephone switching systems… even to the point of mobile communications, cellphones, and the internet; almost all of which was before privacy became a much larger issue like it is now. On top of that, they made more compared to the cost of goods sold, they had fair wages (thanks to unions), and working conditions, especially safety, were on a steady up-hill climb throughout their lifetime. On top of all that, their major assets, like homes and such, were consistently appreciating in value throughout the years. A $60k home in like 1950, depending on location, now sells for 4x or even 10-20x that price.

Millennials, Gen Z/Zoomers, we may live solidly in the information age, with access to a vast wealth of knowledge, almost all the time, constantly connected and constantly in touch, but it has come at a cost. We do not enjoy the same privacy and freedom that our parents did. Everything is posted online, whether we want it to be or not, private conversations get recorded and analysed automatically by our smart assistants either from our phones or the various google home/amazon alexa/whatever devices that litter our households, and at all times we’re being monitored in some way, shape, or form. What’s happening now is that companies, governments and the ruling class are starting to make use of that information against us, to drive everyone further into disorder, futile infighting and dissension that’s only distracting us from their main plot of essentially robbing us of every dime, nickel and dollar they can. More for them, less for everyone else; but everyone is so blinded by idiotic notions like race and gender politics and treating each other like hot garbage because they’re different, ancient ideas of racism and anti-gay propaganda that we’re either fighting for or against very passionately, as they slowly raise prices, lower wages, reduce how much you can buy with your hard-earned dollar, steal our land, force us to rent from them, never own anything, provide everything as a service… All while trying to convince us that it’s in our best interest. The sad thing is, for most, it’s working. The whole “you can trust us” kind of mentality they’ve more or less pushed on us for decades is starting to crumble, and people are starting to ask WHY we should trust them. Then they just jazz hands look at this gay person, isn’t he such an evil sinner? or the alternative of, jazz hands look at these bigots oppressing these gays, aren’t they evil? (which can be substituted with people of color, or people of alternative lifestyles, or hell, even the amish… IDK, they’re not consumers like the rest of us are, let’s go after them, why not?)…

And we keep falling for it, every single fucking time. We’re ALL being oppressed. No matter what your personal beliefs are, WE’RE ALL IN THIS. Unless you’re part of the 1% or more accurately, the 0.1% of ultra rich fuckwads, you’re a target. you may be privileged, or well-off, from family wealth or whatever, but you’re still their target. Any wealth you have, they want. Their only real interest is in taking it from you, and giving you enough to distract you from the fact that it’s happening, so it can continue.

I love this but my lemmy client really needs to shorten long messages and have an (expand) button if we’re gonna have huge character limits like this because scrolling past this took an hour.

I’m sorry, I’m rather word-y. I like to fully explain myself so I am not misunderstood.

it makes for very long posts.

Amazing explanation throughout and super insightful. I don’t believe Lemmy has gilding type emphasis outside of the upvotes, but you deserve it.

Thanks, I’ve been observing the state of technology for a long time. I’m happy to share what I know.

It’s a basic tenet in networking that information is shared freely, as much as is feasible. The entire premise of networking is that we need to work together to accomplish a goal, across platform/vendor boundaries, across company boundaries, and across personal connections. I am specialized in networking, and we tend to work and share more freely than other areas of IT. That’s not to say any are secretive per se (aside from the software companies, those guys are generally dicks about IP, specifically with all the closed-source code that’s locked away, often without a good reason); it’s just that I haven’t had as open discussions about any form of tech with any group of technologists as I have with networking folk.

The real sad part for me is the amount of e-waste this produces. Especially in devices like laptops.

A clean Linux distro can extend a laptop’s life by a decade. I have a laptop from the C2D era that I threw an SSD in and put Linux on. Perfectly serviceable as a basic machine.

It’s shocking how much faster Linux runs compared to a modern windows installation. I do worry however that as more and more programmers focus on web apps that we will eventually see the same problem on Linux as well. Developing desktop applications for Linux is already a pain and the ease of making modern web apps will amplify the problem. At least Linux won’t have all of the awful bloat that Microsoft runs in the background on windows these days, but I don’t think we will be able to escape from web app hell on Linux.

I do worry however that as more and more programmers focus on web apps that we will eventually see the same problem on Linux as well.

I wouldn’t worry about it. If it becomes a problem in Linux, there would have already been an implosion in Windows land and I am pretty sure people will start to notice and come up with better solutions. I honestly don’t understand why web apps have to bundle their own Electron builds instead of just sharing a common installation of specific versions of Electron.

Yup, had a 12 year old laptop that ran my work stuff just fine. i3 window manager uses like no resources. I only just replaced it this month because it did not run dwarf fortress well…

I have a love/hate relationship with desktop web apps on Linux. They are a great blessing in some ways because I get to run apps that just wouldn’t be available to me otherwise because Linux typically isn’t a priority for consumer-focused services. Often support exists as a convenient bonus because it came with the web app platform choice.

On the other hand, you get a web app, which looks nice (hopefully) but gobbles down your resources.

There should be a way to limit CPU usage and memory usage for a PWA on Linux. Definitely something I should look into.
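One way this could be approached, as a rough sketch: on a systemd-based distro, `systemd-run` can start a program in a transient scope with cgroup limits such as `MemoryMax` and `CPUQuota`. The helper name `limited_command`, the 1G / 50% values, and the `chromium --app=https://example.com` launch line below are all placeholders, not anything from this thread, and whether the limits are enforced depends on cgroup v2 and on which controllers your user session has been delegated.

```python
import shlex

def limited_command(app_cmd, memory_max="1G", cpu_quota="50%"):
    """Wrap app_cmd in a transient systemd user scope with resource caps.

    Hypothetical helper: it only builds the argument list, it does not run it.
    """
    return [
        "systemd-run", "--user", "--scope",
        "-p", f"MemoryMax={memory_max}",   # hard memory cap for the scope
        "-p", f"CPUQuota={cpu_quota}",     # share of one CPU's time
        *app_cmd,
    ]

# Placeholder PWA launch line; substitute your own browser and URL.
pwa = ["chromium", "--app=https://example.com"]
cmd = limited_command(pwa)
print(shlex.join(cmd))
# To actually launch it: subprocess.run(cmd, check=True)
```

Printing the command first makes it easy to try by hand in a terminal before wiring it into a launcher.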

How are you running pwas on Linux?

I got a decade old laptop running Linux that’s still kicking and outlived my MacBook. Surprisingly usable with only 4 gigs of RAM thanks to Linux and an SSD upgrade. Without those I would have tossed it long ago.

I don’t typically see computers getting replaced because they are “slow” but because of factors more related to support, like Apple dropping support for older models just because of reasons. Or companies doing routine system replacements because their fleet of computers is getting banged up and damaged, and since the machines are XYZ years past their release and no longer in production, the cost and complexity of repair, maintenance time, etc. are no longer worthwhile. Not to mention, the more insecure members of our species feel their self worth is dependent on how fancy and new their things are.

If you want to point fingers at why performance for operating systems and programs has declined over the years, I would say it’s mostly due to security functions.

I doubt that the main source of software performance issues is due to security. I think the main problem is that software is not optimized anymore. Add this lib and that one.

Most devs I work with don’t even know how much CPU or memory their application needs to run.
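For anyone who wants a first number, here is a minimal sketch of measuring CPU time and peak memory for a piece of Python code using only the standard library. The `measure` and `build_squares` names are made up for illustration. Note that `tracemalloc` only sees allocations made through Python's allocator, so it understates what the OS reports for the whole process.

```python
import time
import tracemalloc

def measure(func, *args, **kwargs):
    """Run func once; return (result, CPU seconds, peak traced bytes)."""
    tracemalloc.start()
    cpu_start = time.process_time()
    try:
        result = func(*args, **kwargs)
    finally:
        cpu_used = time.process_time() - cpu_start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, cpu_used, peak

def build_squares(n):
    # Stand-in workload: allocate a list of n integers.
    return [i * i for i in range(n)]

result, cpu_seconds, peak_bytes = measure(build_squares, 200_000)
print(f"CPU: {cpu_seconds:.3f}s, peak traced memory: {peak_bytes / 1e6:.1f} MB")
```

The exact figures vary by machine and Python version; the point is having any baseline to compare against after adding "this lib and that one".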

I have an ancient early W7-era AMD dual core (bulldozer based? So it’s actually like 1.5c) that runs just peachy on Lubuntu LTS, 8gb of mismatched used Ram I got for free, and an ancient slow HDD.

I use it for D&D (Roll20 and Foundry) and MTG (Cockatrice).

I tested W10 with a ReadyBoost sd card and 2gb RAM and it barely worked.

Hasn’t this always been the case? Software development is a balance between efficiency of code execution and efficiency of code creation. 20 years ago people had to code directly in assembly to make games like Roller Coaster Tycoon, but today they can use C++ (or even more abstract systems like Unity)

We hit the point where hardware is fast enough for most users about 15 years ago, and ever since we’ve been using faster hardware to allow for lazier code creation (which is good, since it means we get more software per man-hour worked)

which is good, since it means we get more software per man-hour worked

In the same way that more slop is good for the hog trough

Human development is the development of labor saving practices (i.e. development tools and methods) that liberate humans and labor to do other things. In this case “good software” just has to be efficient enough to run on the system, do its job, and not slow down the whole system unjustifiably. Why on earth would anybody go full performance optimization autism mode, spending hours grinding down fractions of efficiency out of code, when one couldn’t even notice the difference between it and less optimized code running on the target system? One could spend all that time doing something actually productive for the project, like a new feature, or doing something else entirely. Those earlier game and software devs would have killed for hardware that didn’t require everything to be custom built and optimized to a T. Not having to optimize everything to the max doesn’t produce “slop”, it produces efficiency.

I agree with most of what you said, but the problem is not everyone has brand new hardware. And it sucks that people have to buy new computers just because software devs are lazy and their program uses 10x more memory than it should.

I think the end of Moore’s law will push more software efficiency since the devs won’t be able to count on free hardware gains. As compilers and other dev tools get better, i think the optimizations will become more automated.

Your examples are honestly terrible. C++ is a fast language, and it’s not easy to write fast x86 Assembly, especially faster than what the C++ compiler would spit out by itself. C++ doesn’t cause a slowdown by itself.

20 years ago people could code in Python and JavaScript, or about any high-level language popular today. Most programming languages are fairly old and some were definitely used for game development in the past (like C++), and game engines definitely date back way before 2003, or 1999 when RollerCoaster Tycoon was released.

RCT is an anomaly, not the rule. People who didn’t need to wouldn’t program in Assembly, unless they were crazy and wanted a challenge. You missed the mark by about a decade or so, even then we’re talking about consoles with extremely limited resources like the NES and not PC games like DOOM (1993), which was written in C.

As long as hardware performance keeps increasing, developers will take advantage of it and keep sacrificing performance in exchange for better developer UX. If given a choice between their app using 10x memory vs their app taking 10x less time to develop, most devs would choose the latter, especially if their manager keeps breathing down their neck. The only time a developer would choose to make efficient but longer-to-develop apps is usually where the developer has final say about the project, which usually means small personal side projects, or projects in a company led by technical people who refuse to compromise (which is rare).

Once hardware performance plateaus, we’ll see a resurgence of focus on improving application performance.

This is why I hate electron apps on Linux and Windows alike. Sadly, most apps are going electron, especially popular commercial apps. 😮💨

100%. An example I noticed was Balena Etcher and Rufus - they have about the same functionality. Etcher is 151MB thanks to Electron, Rufus is 1.1MB.
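To put those two download sizes in proportion, a quick back-of-the-envelope calculation using only the figures quoted above (the variable names are just for illustration):

```python
etcher_mb = 151.0   # size quoted above for Balena Etcher
rufus_mb = 1.1      # size quoted above for Rufus

ratio = etcher_mb / rufus_mb
extra_mb = etcher_mb - rufus_mb
print(f"Etcher is about {ratio:.0f}x the size of Rufus ({extra_mb:.1f} MB more)")
# → Etcher is about 137x the size of Rufus (149.9 MB more)
```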

I’m extremely thankful to dd and gparted.

Isn’t flutter running mostly native? Afaik most newly created apps are using flutter which does not use electron in its native versions, right?

Flutter is “native” if your definition of “native” apps is apps that compile into native binaries. However, Flutter is not “native” if your definition includes using the native platform’s UI, because afaik Flutter draws its own UI elements instead of using native UI elements, which is a deal breaker for some people.

The reverse can be said about React Native: it’s not “native” because it uses a JavaScript engine, yet it’s also “native” because it uses the native platform’s UI instead of rendering its own UI elements.

most newly created apps are using flutter

Do you have some examples? I’ve never heard of any desktop apps using Flutter, and trying to Google for examples only leads me to obscure ones I’ve never heard of.

They have a showcase.

From skimming over the list, the ones I spotted showing off their desktop apps were Rive, Superlist and Reflection.app.

Rive is actually a product that I use but I haven’t tried their new desktop app yet so I can’t speak to its startup performance.

Edit: also, I said new ones. I think it will take some time until we get a new super popular app along the lines of discord.

Flutter isn’t popular enough for that. I personally don’t get it though, because Flutter is awesome.

This is my understanding as well. Always thought that if I were to develop an app that I wanted to release far and wide, I’d choose Flutter.

The new installer of Ubuntu is being made with Flutter. I am not sure why since it doesn’t need to be cross platform, but they are working together with the Flutter team at Google.

Cool, at least some hope for low end computer users.

“We don’t need to optimize because modern hardware!”

-Lazy developers kicking Moore’s Law square in the junk

twas always thus, software development is gaseous in that it expands to take up all the area it is placed inside, this is both by the nature of software engineering taking the quickest route to solving any action, as well as by design of collusion between operating system manufacturers (read Microsoft and Apple) and the hardware platform manufacturers they support and promote. this has been happening since the dawn of personal computer systems, when leapfrogging processor, ram, hard drive, bus, and network eventually leads to hitherto improbably extravagant specs bogged down to uselessness. it’s the bane and very nature of the computing ecosphere itself.

The problem is also that developers have to add more and more fancy features that are enabled by the new tech. Twenty years ago, a calculator app didn’t need to have nice animations on its buttons, but these days this is expected.

Can confirm 90 percent of modern software is dogshit. Thanks electron for making it worse.

JavaScript was a mistake.

I hadn’t considered the latency of abstraction due to non-native development. I just assumed modern apps are loaded with bloatware, made more sophisticated by design, and perhaps less elegantly programmed on average.

My laptop was running slow until I blocked windows 10 phoning home 3000 times per day. Looking at you browser.pipe.aria.microsoft.com
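The post doesn't say how the blocking was done. One common approach, assumed here purely for illustration, is a hosts-file entry that points the hostname at a non-routable address. A minimal sketch that generates such lines (the `hosts_block_lines` helper is hypothetical, and the only hostname used is the one mentioned above):

```python
def hosts_block_lines(hostnames, sink="0.0.0.0"):
    """Return hosts-file lines that map each hostname to a sink address."""
    return [f"{sink} {name}" for name in sorted(set(hostnames))]

telemetry_hosts = ["browser.pipe.aria.microsoft.com"]
for line in hosts_block_lines(telemetry_hosts):
    print(line)
# → 0.0.0.0 browser.pipe.aria.microsoft.com
```

On Windows the hosts file lives at `C:\Windows\System32\drivers\etc\hosts` and needs admin rights to edit. A DNS-level blocker on the network is another option, and neither is guaranteed to catch every telemetry path.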

goddammit. I was watching this going “hey, my system is like that!” Checked, and yes, my 12-core/24-thread Ryzen 5900X with 32GB RAM and an NVMe drive is painfully slow opening things like calculator, terminal etc. I am running Fedora 38 with KDE desktop… What the hell man

Single thread performance matters more when your metric is the speed of opening calculator, terminal and other apps. However, the 5900X has pretty good single thread performance already, roughly 1.5x faster than my old processor (i7-4790), and opening apps is pretty fast in my case (<1 second). Something is probably wrong with your setup. Perhaps you accidentally set your desktop power mode to “power saver” instead of “performance”?

Opening a calculator/terminal should be on the order of 1ms or even faster. 1s is absurd
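If you want a rough number for your own machine, here is a small sketch that times how long it takes to spawn a process and wait for it to exit. It uses the Python interpreter itself as a stand-in command. This is not the same as timing a GUI app until its first window appears, which needs a different approach, and the `launch_time` helper name is made up.

```python
import subprocess
import sys
import time

def launch_time(cmd, runs=5):
    """Return the best wall-clock seconds to start cmd and wait for it to exit."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
        timings.append(time.perf_counter() - start)
    return min(timings)

# Stand-in workload: start an interpreter that does nothing and exits.
best = launch_time([sys.executable, "-c", "pass"])
print(f"best of 5: {best * 1000:.1f} ms")
```

Taking the minimum over several runs filters out cold-cache effects, so comparing the first run against the best run can also hint at whether disk or CPU is the bottleneck.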

Alas, I’m using gnome so ~1s calculator launch is the best I can get 😬

Yeah there does seem to be something wrong now that I can see it. Now just need to work out what it is…

deleted by creator

It’s not just applications. I recently “upgraded” two of my PCs from Windows 8.1 to Windows 10. Ever since, having the mouse polling rate above like 125Hz and moving the cursor would result in frame drops in games.

This happened across two machines with different hardware, the only common denominator being the switch in Windows version. Tried a bunch of troubleshooting until I ultimately upgraded CPU + RAM due to RAM becoming faulty some time later on one of the machines. That finally resolved the issue.

So yeah, having to upgrade your hardware not because it’s showing its age but rather because the software running on it has become more inefficient is a real problem IMO.

Ah yes the mouse polling thing, the first time in ages I started up minecraft, every time I moved the mouse I dropped from 144fps to 1fps, and I came across the solution and man did it at first feel like bullcrap, but it worked for some reason

I came across the solution and man did it at first feel like bullcrap, but it worked for some reason

Wait, what was the solution? o_O

Please tell me, I’m begging you. I’ve been searching for like half a year without success. One of my PCs is still running “old” hardware (first gen highest tier Ryzen) and so I’m desperate to get this fixed ^^"

I legit had to put down the polling speed, I put it down to 1/10 of what it used to be and behold, no more lag in specifically Minecraft

Ah yeah. I had to do the same. When you said “solution” I was hoping you meant you found a way to resolve the FPS drops while keeping polling at the original polling rate ^^

Isn’t this equivalent to https://en.wikipedia.org/wiki/Braess's_paradox

and

Went down a bit of a rabbit hole here. Very interesting. Would love to see a Vsauce style video going into various real life examples of this

Thanks for sharing.

Cell phone batteries. Phones used to have shit batteries but they would last 3 days because the software running on them was minimal and the hardware was low power. Batteries have become way better in the last 20 years but phones don’t even last a day because of all the hardware and software running super demanding and inefficient programs.

The biggest contributor is actually screen size. Your phone screen is about 8x larger and 16x higher resolution than an old dumb phone.

Part of that is just us using our phones more. If I used my smartphone the way I would use an old flip phone, the battery would probably last a few days

Would love to see a vsauce style video

Heyy Vsauce, Michael here. But where ‘here’ is in modern computers, is very different from where ‘here’ was, or used to be in older PCs. But why is that?

I think this one is pretty good.

Most interesting wiki read in a long while! Thanks for posting this!

Also https://en.wikipedia.org/wiki/Parkinson's_law : The duration of public administration, bureaucracy and officialdom expands to fill its allotted time span, regardless of the amount of work to be done.

Fitting quotes:

- Data expands to fill the space available for storage.

- The demand upon a resource tends to expand to match the supply of the resource (If the price is zero). The reverse is not true.

Rabbits

Stop buying games that need 220gb of drive space, an Nvidia gtx 690000 and a 7263641677 core processor then. More than a 60gb download size means I pirate it unless it’s a really really damn good game. Games with no drm that can be run without a $20k computer, I buy.

Games are far from the worst examples of this. Largely games are still very high performance. Some lax policies on sizes are not the norm, most data is large because it’s just high detail.

The real losses are simple desktop apps being entire web engines.

Games are definitely not very well optimised. For one, most indie publishers are artists rather than software developers, which means that they do not have the technical expertise to properly program their applications, especially on the OpenGL/Vulkan/Direct3D side of things. Large video game corporations, in contrast, are indeed quite capable of reducing the hardware requirements and increasing the performance of their games, but they are often not willing, as became particularly evident recently with Jedi: Survivor, where a major public outcry was required for them to fix the game’s performance problems, which they have done quite competently.

Most indie 3D games are not programmed from scratch, working directly with Vulkan, OpenGL, etc. Instead, they’re using a licensed game engine like Unreal. A lot of AAA game houses didn’t like the game engine license fees eating into profit margins so they came up with their own engines that they maintain internally with varying levels of success.