As someone who spends time programming, I of course find myself in conversations with people who aren’t as familiar with it. It doesn’t happen all the time, but these discussions can lead to people coming up with some pretty wild misconceptions about what programming is and what programmers do.

I’m sure many of you have had similar experiences. So, I thought it would be interesting to ask.

The notion that creating a half-decent application is quick and easy enough that I would be willing to transform their idea into reality for free.

I’m pretty sure that government software always blows because they think software can be written according to a fixed schedule and budget

It’s tempting to think it’s like building a house, and if you have the blueprints & wood, it’ll just be fast and easy. Everything will go on schedule

But no, in software, the “wood” is always shape shifting, the land you’re building on is shape shifting, some dude in Romania is tryna break in, and the blueprints forgot that you also need plumbing and electric lines

Well, that’s probably true for the most part, but the reality is that it comes down to the lowest bidder 9 times out of 10. Unrealistic budgets and unrealistic time frames, with the cheapest labor they can find, are what you get on a large number of government-funded projects, year after year.

One of the most common problems with government or other big-organisation software is that it doesn’t scale, either “not well” or “not at all”.

Some guy hacks up a demo that looks nice and seems to do what the customer wants, but then it turns out a) that it only allows for (number of open ports on one machine) users at the same time, and b) it only works if everything runs on one machine. Or worse, one core.

> It’s tempting to think it’s like building a house, and if you have the blueprints & wood, it’ll just be fast and easy. Everything will go on schedule

It never goes according to schedule, even if there are blueprints & wood.

Building a house (or any construction project) is notoriously hard to keep on schedule and on budget, too.

I have a hypothesis that one factor is that government software needs to work for everyone.

A private company can be like “we only really support Chrome”, but even people running IE6 at a tiny resolution need to renew their license.

I believe this is usually covered by the fact that you can do just about anything you need to do over mail. I once ran into a government site that only worked on Edge.

That’s absolutely true. What’s hard and what’s easy in programming is so completely foreign to non-programmers.

Wait, you can guess my password in under a week but you can’t figure out how to pack a knapsack?

I once had this and ended up paying for the meeting room cuz he was broke.

What’s worse is them insisting that you build it in Rust and Mongodb only.

That just because I’m a programmer that must mean I’m a master of anything technology related and can totally help out with their niche problems.

“Hey computer guy, how do I search for new channels on my receiver?”

“Hey computer guy, my excel spreadsheet is acting weird”

“My mobile data isn’t working. Fix this.”

My friend was a programmer and served in the army, where people ordered him to go fix a satellite. He said he has no idea how but they made him try anyways. It didn’t work and everyone was disappointed.

And everyone expects you to know how to make phone apps.

Like, I think I know what to google in order to start learning how.

If you know Java or Javascript you can easily build apps.

But like in every other software field, design is often more important.

> He said he has no idea how but they made him try anyways.

Uh, I’ve been present when such a thing happened. Not in the military, though. A guy was supposed to install a driver on a telephone system, despite not being a software guy (he was the guy running the wires). Result: about as bad as expected. The company then sent two specialists on Saturday/Sunday to re-install everything.

Ironically, most of those things are true, but only with effort. We are better than most people at solving technical problems, or even problems in general, because being a programmer requires the person to be good at research, reading documentation, creative problem solving, and following instructions. Apparently those aren’t traits that are common among average people, which is baffling to me.

Sometimes I’ll solve a computer problem for someone in an area that I know nothing about by just googling it. After telling them that all I had to do was google the problem and follow the instructions they’ll respond by saying that they wouldn’t know what to google.

Just being experienced at searching the web and having the basic vocabulary to express your problems can get you far in many situations, and a fair number of people don’t have that.

My neighbour asked me to take a look at her refrigerator because it wasn’t working. I am a software developer.

I used to get a lot of people asking for help with their printer. No, just because I am a software developer doesn’t mean I know why your printer isn’t working. But, yes, I can probably help you…

“Sometimes when somebody called it shows up up here but normally it covers the screen and I can see the name.” I have no idea how those businesses fix people’s phones when they hear this kind of description. Makes me tear my hair out.

Don’t pretend you suck at these things. You know very well you are fucking equipped to fix this kind of thing when you work with programming. Unless you’re, like a web developer or something ofc

You are part of the problem

Nope

Why should I fix any of those peasant problems if I can order the lowly technician to fix my computer which I use to make crappy SQL queries? 🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐🧐

Because you are a nice person to your relatives???

Thankfully dad knows how to troubleshoot stuff or go to the technician. I just tell him that I haven’t used Windows in ages.

The worst and most common misconception is that I can fix their Windows issues from a vague description they give me at a party.

Or some stupid Facebook “””issue”””

At least that’s an easy one, you just convince them to delete their account. /s

My favorite is “and there was some kind of error message.” There was? What did it say? Did it occur to you that an error message might help someone trying to diagnose your error?

> What did it say?

I’ve had users who legitimately did not understand this question.

“What do you mean, what did it say? I clicked on it but it still didn’t work.”

Then you set up an appointment to remote in, ask them to show you what they tried to do, and when the error message appears, they instantly close it and say “See, it still doesn’t work. What do we even pay you for?”

I’ve had remote sessions where this was repeated multiple times, even after telling them specifically not to close the message. It’s an instinctive reflex.

Or it won’t happen when you’re watching, because then they’re thinking about what they’re doing and they don’t make the same unconscious mistake they did that brought up the error message. Then they get mad that “it never happens when you’re around. Why do you have to see the problem anyway? I described it to you.”

When that happens, I’m happy. Cause there is no error when the task is done right.

I mail them a quick step-by-step manual with what they just did while I watched.

When the error happens the next time I can tell them to RTFM and get back to me if that doesn’t solve the issue.

Isn’t the solution to send them this link? ;-)

https://distrowatch.com/dwres.php?resource=major

Won’t solve their problem, but they won’t be your friend anymore :)

This does solve the problem for you though

You Don’t Win Friends with ~~Salad~~ Linux

No, it’s this link:

Windows bad. Linux good. No need for nuance, just follow the hivemind.

Do you have a few minutes to talk about our Lord and Saviour, Linus Torvalds?

Don’t you mean Linux Torvalds?

that hurt to type

Oh you have an issue on Linux? Just try a different distro

(this one hurts more because it technically usually works)

Lol! My mum still asks both me and my husband (“techy” jobs according to her) to solve all her problems with computers/printers/ the internet at large/ any app that doesn’t work… the list is endless. I take it as a statement of how proud she is of me that she would still ask us first, even if we haven’t succeeded in fixing a single issue since the time the problem was an old cartridge in the printer some 5-6 years ago.

My answer: “I don’t play Windows”.

I once had a friend who told me that he finds it interesting that I think and write in 1s and 0s.

Confuse him with “I used to do that but I’m nonbinary now”

Oh, my gender, sexuality, and base are also quantum

Every Hollywood programmer

That might be true of VHDL / Verilog programmers I guess.

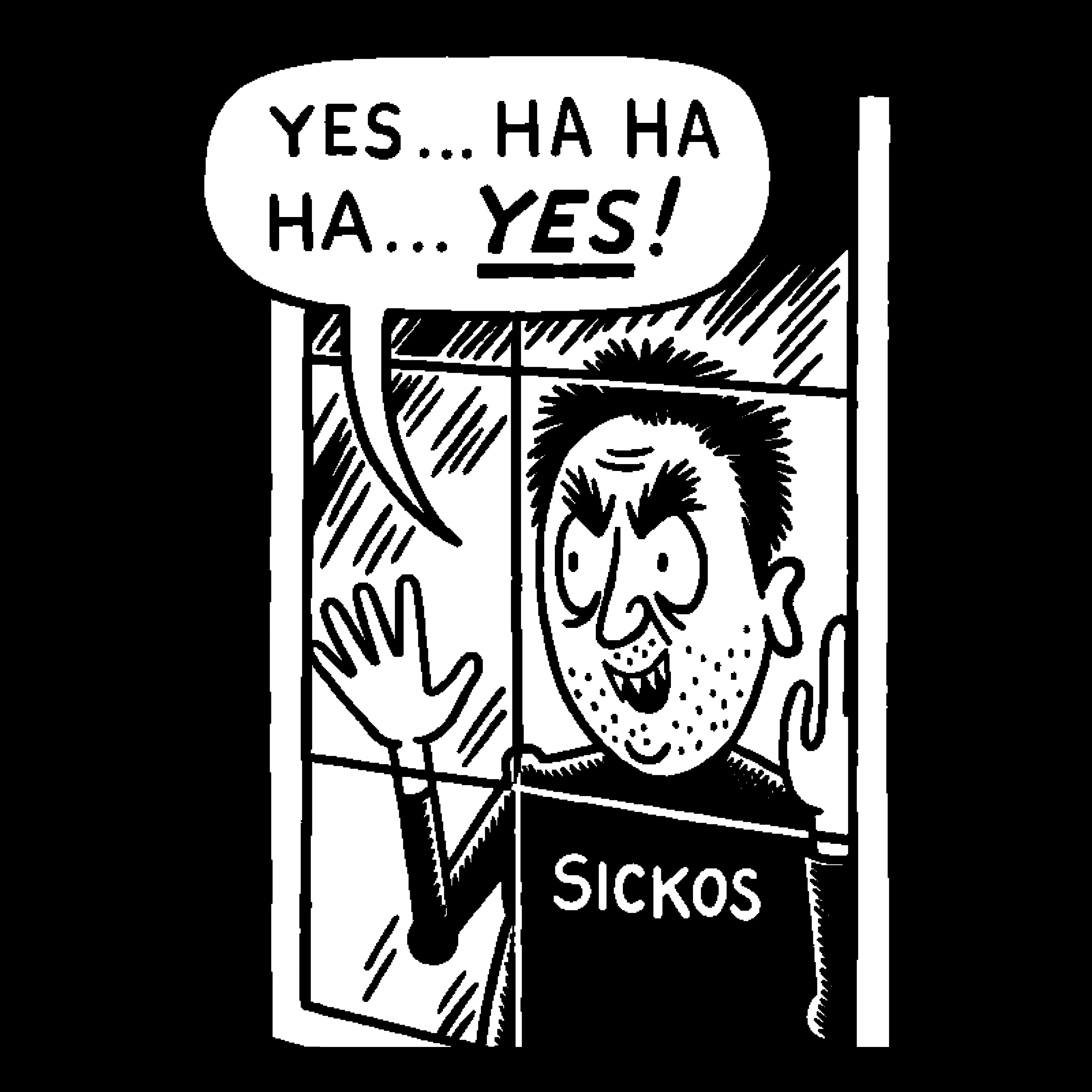

“Just”

That one word has done a fuck ton of lifting over my career.

“Can’t you just make it do this”

I can’t “just” do anything you fuck head! It takes time and lots of effort!

It’s a meme to say “can’t you just” at my workplace

“Would you kindly”…

Also “simple”. “It’s a simple feature.”

Simple features are often complex to make, and complex features are often way too simple to make.

I believe that it’s not for nothing that simplicity is considered more sophisticated. Many, many cycles of refinement.

It’s like, gotta be just one line of code, right?

I worked in a post office once. I once had a customer demand some package delivery option, if I remember correctly. He was adamant that it was “only a few lines of code”, that I was difficult for not obliging, and that anyone in the postal service should make code changes like that on the whims of customers. It felt like I could have more luck explaining “wallpaper” to the currents in the ocean…

> explaining “wallpaper” to the currents in the ocean…

If this isn’t just a saying I haven’t heard of, I’m doing my best to make it a commonplace phrase, absolutely perfect in this context!

Thank you so kindly :) It’s not a saying, as far as I know.

I used to work on printer firmware; we were implementing a text box that came up once you had scanned a certain number of pages on a collated, multi-page copy job. The text box told you it would print the pages it had stored to free up memory for more pages; after those pages had printed, another text box would come up asking if you wanted to keep scanning pages, or just finish the job.

The consensus was that it would be a relatively simple change; 3 months and 80 files changed, with somewhere in the ballpark of 10,000-20,000 lines changed, proved that wrong.

Printer firmware is tens of thousands of lines long

I’m starting to understand why printers are so horrible

Just what was in the main repo (at least one other repo was used for the more secure parts of the code) was a little over 4 million lines. But yeah there’s a lot of complexity behind printers that I didn’t think about until I had worked on them. Of course that doesn’t mean they have to be terrible, it’s just easier to fall into without a good plan (spoiler alert: the specific firmware I was working in didn’t have a good plan)

Out of curiosity do you have any good examples of this hidden complexity? I’ve always kinda wondered how printers work behind the scenes.

A lot of the complexity came from the various scenarios you could be in; my go-to answer whenever people would ask me “Why can’t someone just make printer firmware simple?” is that you could, if you only wanted to copy in one size with one paper type, no margin changes, and never do anything else.

There are just so many different control paths that need to act differently; many of the bugs I worked on involved scaling and margins. The hard part was making sure the image ended up in a proper form before it made it to hardware (which, as more complexity, ran on a different processor and OS than the backend so that it could run realtime) when dealing with different input types (flatbed scanner vs a document feeder, which could be an everyday size, or like 3 feet long), different paper sizes, scaling, and output paper. I mainly worked on the copy pipeline, but that also was very complex, involving up to something like 7 different pieces in the pipe to transform the image.

Each piece in the pipeline was decently complex, with a few having their own team dedicated to them. In theory, any piece that wasn’t an image provider or consumer could go in any order — although in practice that didn’t happen — so it had to be designed around different types of image containers that could come in.

All of that was also working alongside the job framework, which communicated with the hardware, tracked what state jobs were in and when different pieces of the pipeline could be available to different jobs, locked out jobs when someone was using the UI in certain states so that they don’t think what’s printing is their job, and handled jobs coming in through any other interface (like network or web).

That’s the big stuff that I touched; but there was also localization; the UI and web interfaces as a whole; the more OS side of the printer like logging in, networking, or configuration; and internal pages — any page that the printer generates itself, like a report or test page. I’m sure there’s a lot more than that, and this is just what I’m aware of.

Anything can fit in one line if you are brave enough.

Everything is one line of code in a Turing machine.

I’m pretty sure that’s a breach of style guide in at least three different languages

but probably not C++


I like to say:

We have a half finished skyscraper, and you’re asking me to Just add a new basement between the second and third floor. Do you see how that might be difficult? If we want to do it, we have to tear down the entire building floor by floor, then build up again from the second floor. Are you prepared to spend the money and push back the release date for that new feature?

“Just” is a keyword that tells me I’m going to triple my estimates. “Just” signifies the product owner has no idea what they are requesting, and it always becomes a dance of explaining why they are wrong.

I would have written that comment if you hadn’t already done it.

I don’t know exactly why people think that we can “just” do whatever they ask for.

Maybe it has something to do with how invisible software is to the tech-illiterate person but I’m not convinced. I’m sure there are other professions that get similar treatment.

I know you built the bridge to support 40 ton vehicles, but I think if we just add a beam across the middle here, we should be able to get 200 tons across this no problem? Seems simple, please have it done by Monday!

I get that from our product owners a lot, and I usually say “yes!”, followed by an explanation of how much time it will take and why it’s not the path we want to take. People respond well to you agreeing with them, and then explaining why it’s probably not the best approach.

A lot of people completely overrate the amount of math required. Like, it’s probably been a week since I used an arithmetic operator.

Sometimes when people see me struggle with a bit of mental maths or use a calculator for something that is usually easy to do mentally, they remark “aren’t you a programmer?”

I always respond with “I tell computers how to do maths, I don’t do the maths”

Which leads to the other old saying, “computers do what you tell them to do, not what you want them to do”.

As long as you don’t let it turn around and let the computer dictate how you think.

I think it was Dijkstra that complained in one of his essays about naming uni departments “Computer Science” rather than “Comput*ing* Science”. He said it’s a symptom of a dangerous slope where we build our work as programmers around specific computer features or even specific computers instead of using them as tools that can enable our mind to ask and verify more and more interesting questions.

The scholastic discipline deserves that kind of nuance and Dijkstra was one of the greatest.

The practical discipline requires you build your work around specific computers. Much of the hard-earned domain knowledge I’ve built up as a staff software engineer would be useless if I changed the specific computer it’s built around - Android OS. An Android phone has very specific APIs, code patterns and requirements. Being ARM, even its underlying architecture is fundamentally different from the majority of computers (for now; we’ll see how much the M1-style ARM architecture becomes the standard for anyone other than Mac).

If you took a web dev with 10YOE and dropped them into my Android code base and said “ok, write” they should get the structure and basics but I would expect them to make mistakes common to a beginner in Android, just as if I was stuck in a web dev environment and told to write I would make mistakes common to a junior web dev.

It’s all very well and good to learn the core of CS: the structures used and why they work. Classic algorithms and when they’re appropriate. Big O and algorithmic complexity.

But work in the practical field will always require domain knowledge around specific computer features or even specific computers.

I think Dijkstra’s point was specifically about uni programs. A CS curriculum is supposed to make you train your mind for the theory of computation not for using specific computers (or specific programming languages).

Later during your career you will of course inevitably get bogged down into specific platforms, as you’ve rightly noted. And that’s normal because CS needs practical applications, we can’t all do research and “pure” science.

But I think it’s still important to keep it in mind even when you’re 10 or 20 or 30 years into your career and deeply entrenched into this and that technology. You have to always think “what am I doing this for” and “where is this piece of tech going”, because IT keeps changing and entire sections of it get discarded periodically and if you don’t ask those questions you risk getting caught in a dead-end.

He has a rant where he’s calling software engineers basically idiots who don’t know what they’re doing, saying the need for unit tests is a proof of failure. The rest of the rant is just as nonsensical, basically waving away all problems as trivial exercises left to the mentally challenged practitioner.

I have not read anything from/about him besides this piece, but he reeks of that all too common, insufferable, academic condescension.

He does have a point about the theoretical aspect being often overlooked, but I generally don’t think his opinion on education is worth more than anyone else’s.

Article in question: https://www.cs.utexas.edu/~EWD/transcriptions/EWD10xx/EWD1036.html

Sounds about right for an academic computer scientist, they are usually terrible software engineers.

At least that’s what I saw from the terrible coding practices my brother learned during his CS degree (and what I’ve seen from basically every other recent CS grad entering the workforce who didn’t do extensive side projects and self-teaching). I had to spend years getting him to unlearn them afterwards, when we worked together on a startup idea writing lots of code.

At the same time, I find it amazing how many programmers never make the cognitive jump from the “playing with legos” mental model to “software is math”.

They’re both useful, but to never understand the latter is a bit worrying. It’s not about using math, it’s about thinking about code and data in terms of mapping arbitrary data domains. It’s a much more powerful abstraction than the legos and enables you to do a lot more with it.

For anybody who finds themselves in this situation I recommend an absolute classic: Defmacro’s “The nature of Lisp”. You don’t have to make it through the whole thing and you don’t have to know Lisp, hopefully it will click before the end.

> the “playing with legos” mental model

??

Function/class/variables are bricks, you stack those bricks together and you are a programmer.

I just hired a team to work on a bunch of Power platform stuff, and this “low/no-code” SaaS platform paradigm has made the mentality almost literal.

I think I misunderstood lemmyvore a bit, reading some criticism into the Lego metaphor that might not be there.

To me, “playing with bricks” is exactly how I want a lot of my coding to look. It means you can design and implement the bricks, connectors and overall architecture, and end up with something that makes sense. If running with the metaphor, that ain’t bad, in a world full of random bullshit cobbled together with broken bricks, chewing gum and exposed electrical wire.

If the whole set is wonky, or people start eating the bricks instead, I suppose there’s bigger worries.

(Definitely agree on “low code” being one of those worries, though - turns into “please, Jesus Christ, just let me write the actual code instead” remarkably often. I’m a BizTalk survivor and I’m not even sure that was the worst.)

My take was that they’re talking more about a script kiddy mindset?

I love designing good software architecture, and like you said, my object diagrams should be simple and clear to implement, and work as long as they’re implemented correctly.

But you still need knowledge of what’s going on inside those objects to design the architecture in the first place. Each of those bricks is custom made by us to suit the needs of the current project, and the way they come together needs to make sense mathematically to avoid performance pitfalls.


Read that knowing nothing of lisp before and nothing clicked tbh.

When talking about tools that simplify writing boilerplate, it only makes sense to me to call them code generators if they generate code for another language. Within a single language a tool that simplifies complex tasks is just a library or could be implemented as a library. I don’t see the point about programmers not utilizing ‘code generation’ due to it requiring external tools. They say that if such tools existed in the language natively:

> we could save tremendous amounts of time by creating simple bits of code that do mundane code generation for us!

If code is to be reused you can just put it in a function, and doing that doesn’t take more effort than putting it in a code generation thingy. They preach how the xml script (and lisp I guess) lets you introduce new operators and change the syntax tree to make things easier, but don’t acknowledge that functions, operator overloading etc accomplish the same thing only with different syntax, then go on to say this:

> We can add packages, classes, methods, but we cannot extend Java to make addition of new operators possible. Yet we can do it to our heart’s content in XML - its syntax tree isn’t restricted by anything except our interpreter!

What difference does it make that the syntax tree changes depending on your code vs the call stack changes depending on your code? Of course if you define an operator (apparently also called a function in Lisp) somewhere else it’ll look better than doing each step one by one in the Java example. Treating functions as keywords feels like a completely arbitrary decision. Honestly they could claim Lisp has no keywords/operators and it would be more believable. If there is to be a syntax tree, the parentheses seem to be a better choice for what changes it than the functions that just determine what happens at each step like any other function. And even going by their definition, I like having a syntax that does a limited number of things in a more visually distinct way more than a syntax that does limitless things all in the same monotonous way.

> Lisp comes with a very compact set of built in functions - the necessary minimum. The rest of the language is implemented as a standard library in Lisp itself.

Isn’t that how every programming language works? It feels unfair to raise this as an advantage against a markup language.

Data being code and code being data sounded like it was leading to something interesting until it was revealed that functions are a separate type and that you need to mark non-function lists with an operator for them to not get interpreted as functions. Apart from the visual similarity in how it’s written due to the syntax limitations of the language, data doesn’t seem any more code in Lisp than evaluating strings in Python. If the data is valid code it’ll work, otherwise it won’t.

The only compelling part was where the same compiler for the code is used to parse incoming data and perform operations on it, but even that doesn’t feel like a game changer unless you’re forbidden from using libraries for parsing.

Finally, I’m not sure how the article relates to code being math either. It just felt like inventing new words to call existing things and insisting that they’re different. Or maybe I just didn’t get it at all. Sorry if this was uncalled for. It’s just that I had expected more after being promised enlightenment by the article.

This is a person that appears to actually think XML is great, so I wouldn’t expect them to have valid opinions on anything really lol

On the other hand, in certain applications you can replace a significant amount of programming ability with a good understanding of vector maths.

We must do different sorts of programming…

There’s a wide variety of types of programming. It’s nice that the core concepts can carry across between the disparate branches.

If I’m doing a particular custom view I’ll end up using `sin cos tan` for some basic trig but that’s about as complex as any mobile CRUD app gets.

I’m sure there are some math heavy mobile apps but they’re the exception that proves the rule.

You should probably use matrices rather than trig for view transformations. (If your platform supports it and has a decent set of matrix helper functions.) It’ll be easier to code and more performant in most cases.

I mean I’m not sure how to use matrices to draw the path of 5 out of 6 sides of a hexagon given a specific center point but there are some surprisingly basic shapes that don’t exist in Android view libraries.

I’ll also note that this was years ago before android had all this nice composable view architecture.
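For what it’s worth, here is a rough sketch of the trig involved in that hexagon case, in plain Python rather than Android view code. The function name `hexagon_path`, the `radius` parameter and the choice to start at angle 0 are all assumptions made for illustration; nothing here comes from the original app.

```python
import math


def hexagon_path(cx, cy, radius, sides_to_draw=5):
    """Return the points of an open path along a regular hexagon.

    The hexagon is centred on (cx, cy). Drawing `sides_to_draw` sides
    needs `sides_to_draw + 1` points, because each side joins two
    neighbouring corners. All names here are hypothetical.
    """
    if not 0 <= sides_to_draw <= 6:
        raise ValueError("a hexagon only has 6 sides")

    points = []
    for i in range(sides_to_draw + 1):
        # Corners of a regular hexagon sit 60 degrees apart on a circle.
        angle = math.radians(60 * i)
        x = cx + radius * math.cos(angle)
        y = cy + radius * math.sin(angle)
        points.append((x, y))
    return points
```

Calling `hexagon_path(10.0, 20.0, 5.0)` gives six points, which is enough to connect five sides and leave the sixth open. In a view you would presumably feed those points to whatever move-to/line-to path API the platform offers.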

Hah, yeah a hexagon is a weird case. In my experience, devs talking about “math in a custom view” has always meant simply “I want to render some arbitrary stuff in its own coordinate system.” Sorry, my assumption went too far. 😉

Yeah it was a weird ask to be fair.

Thankfully android lets you calculate those views separately from the draw calls so all that math was contained to measurement calls rather than calculated on draw.

Tbf, that’s probably because most CS majors at T20 schools get a math minor as well because of the obscene amount of math they have to take.

Negl I absolutely did this when I was first getting into it; especially with langs where you actually have to import something to access “higher-level” math functions. All of my review materials have me making arithmetic programs, but none of it goes over a level of like. 9th grade math, tops. (Unless you’re fucking with satellites or lab data, but… I don’t do that.)

They can’t possibly judge what is trivial to achieve and what’s a serious, very hard problem.

As always, there is an XKCD for that.

The example given in the comic has moved from one category to the other. Determining whether an image contains a bird is a fairly simple “two hour” task now.

Plot twist: The woman in the comic is Fei-Fei Li, she got the research team and five years and succeeded 🤯

Well that’s after thousands of people and 100s of millions in money

Of course, but I still find it remarkable that the task that was picked as an example for something extremely difficult is now trivially easy just a few years later

That is a pretty hard thing to do, to be fair. And the list of things that are easy sometimes makes big jumps forward and the effect of details on the final effort can be massive.

You’re right! Even for programmers.

- You’re a hacker (only if you count the shit I program as hacks, being hack jobs)

- You can fix printers

- You’re some sort of super sherlock for guessing the reason behind problems (they’ll tell you “my computer is giving me an error”, fail to provide further details and fume at your inability to guess what’s wrong when they fail to replicate)

- If it’s on the screen, it’s production ready

> If it’s on the screen, it’s production ready

“I gave you a PNG, why can’t you just make it work?”

I actually get that somewhat often, but for 3D printing. People think a photo of a 3D model is “the model”

Dude, I would just 2d print the png they sent and give them the piece of paper.

If they complained, I would say: “I literally printed the thing you told me to print.”

I’ve had questions like your 3rd bullet point in relation to why somebody’s friend is having trouble with connecting a headset to a TV.

No idea. I don’t know what kind of headset or what kind of TV. They are all different, Grandma.

People think I can hack anything ever created, from some niche 90s CD software to online services

A friend asked me to attempt data recovery on some photos which ‘vanished’ off a USB stick.

Plugged it in, checked for potential hidden trash folders, then called it a day. Firstly I have never done data forensic and secondly: No backup? No mercy.

There are tools for that fyi

Well, here’s the important part:

> I have never done data forensic

So yeah, I didn’t know that at the time. Anyway: Which tools are you talking about in particular?

Windows File Recovery, WinfrGUI, Easeus, Aomei, DiskGenius, etc.

Someone else already named some tools, so I won’t repeat. But the reason this works is that even once you clear out those trash files, the OS usually only removes the pointer to where the data lives on the disk, and the disk space itself isn’t overwritten until it’s needed to save another file. This is why these tools have a much higher chance of success sooner after file deletion.
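A toy model of that idea, as a sketch only: the `ToyDisk` class and its methods are made up for illustration and do not mirror any real filesystem or recovery tool. It keeps an index of which blocks belong to which file; deleting drops the index entry but leaves the block contents alone until a later write reuses them.

```python
class ToyDisk:
    """Hypothetical toy model: an index of files pointing at data blocks."""

    def __init__(self, n_blocks):
        self.blocks = [None] * n_blocks  # raw storage, one chunk per block
        self.index = {}                  # filename -> list of block numbers

    def _free_blocks(self):
        # A block is "free" when no file in the index points at it,
        # regardless of what bytes are still sitting in it.
        used = {b for blocks in self.index.values() for b in blocks}
        return [i for i in range(len(self.blocks)) if i not in used]

    def write(self, name, chunks):
        free = self._free_blocks()
        if len(chunks) > len(free):
            raise OSError("disk full")
        chosen = free[:len(chunks)]
        for block, chunk in zip(chosen, chunks):
            self.blocks[block] = chunk   # old contents get overwritten here
        self.index[name] = chosen

    def delete(self, name):
        # Only the pointer goes away; the block contents are untouched.
        del self.index[name]

    def scan_unreferenced(self):
        """Roughly what a recovery tool looks for: leftover data in free blocks."""
        return [self.blocks[i] for i in self._free_blocks()
                if self.blocks[i] is not None]
```

In this model, writing `["AA", "BB"]` as `photo.jpg` and then deleting it still leaves both chunks visible to `scan_unreferenced()`. Writing one new chunk afterwards reuses the first free block, so only `"BB"` survives, which is the "sooner is better" effect described above.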

That there’s something inherently special about me that makes me able to program…

… Yes…patience and interest.

The things that make me a good programmer:

- I read error messages

- I put those errors in Google

- I read the results that come up

Even among my peers, that gives me a leg up apparently.

Don’t underestimate what having the necessary intuitions to engage with mathematics does for you. A significant portion of the population is incapable of that, mostly because we have a very poor way of teaching it as a subject.

Funny you should say that as I was thinking that the idea that math has anything to do with programming is the biggest miscomprehension I encounter.

Hey we did all sorts of crazy shit with linear algebra, vectors, matrices and shit in college programming. Now I sometimes do some basic arithmetic in work life. E.g.:

n = n + 1

Sometimes, very rarely, I tell my squad that today’s our unlucky day and we’re actually going to have to do math to the problem.

This is very fair. Math has always come fairly easily to me. So math intuition plays a part in my interest and ability to learn to program.

I think most people, even smart people, assume they couldn’t do it though because I’m some kind of genius, which only a few programmers actually are.

Agreed. Few geniuses, it’s mostly driven people with slightly above average intelligence and a good bit of opportunity.

I can’t do math for shit and I failed formal logic in uni. I’m not built for math. I just… Don’t care and can’t make myself care. I’ve taught myself python over the past year and have become fairly comfortable with bash. Which has weirdly helped me with python?

Anyway I’m not very good at either yet. And there are huge gaps in my knowledge. But I’m learning every day.

I’ve done it on my own, and dove right into the fucking deep end with it which is probably the hardest way. But if I can do it then anyone can. You just need to want it. Why do I want it? I have no idea. I’d go crazy doing it for a living.

Learning more languages always helps understanding ime. I’d recommend learning C.

Learning python isn’t jumping in at the deep end. Learning assembly or C would be the deep end. Also programming has little to do with maths anymore, and the maths you use for programming isn’t the kind most people are taught in school.

You’re misunderstanding my use of the phrase.

I’m using it in the context of immersing yourself in something you have no understanding of. I just dove right in and skipped most of the intro type stuff.

You’re using the phrase to talk about relative complexity / difficulty. That’s not how I’ve usually heard it used, but it makes sense.

Like. Most people learning Python start with hello world. I spent too many hours learning to one-hot encode a 500 GB dataset of reddit porn and tweak StyleGAN3 a bit to train it on porn. None of which is remarkable objectively, but there were a lot of very basic things I needed to learn to finish the task. That’s what I mean by jumping in the deep end: throwing yourself into something you are probably poorly or ill-equipped for and just figuring it out as you go.

There is a deep end of coding complexity of course, but, different kind of deep end.

I met a friend of a friend recently and they asked what I did and I told them I’m a computer systems engineer and they were like “oh you must be smart” and I was like “I like to think that I’m good at what I do, but trust me. I am not smart”

Like stoly said above, I think programmers are probably slightly above average intelligence overall, so don’t sell yourself short there. But yeah. We’re not geniuses

I don’t, I’ve met far too many

That IT subject matter like cybersecurity and admin work is exactly the same as coding,

At least my dad was the one who bore the brunt of that mistake, and now I have a shiny master’s degree to show to all the recruiters that still don’t give my resume a second glance!

“But why? It both has to do with computers!” - literally a project manager at my current software project.

That IT subject matter like cybersecurity and admin work is exactly the same as coding,

I think this is the root cause of the absolute mess that is produced when the wrong people are in charge. I call it the “nerd equivalency” problem, the idea that you can just hire what are effectively random people with “IT” or “computer” in their background and get good results.

From car software to government websites to IoT, there are too many people with often very good ideas, but with only money and authority, not the awareness that it takes a collection of specialists working in collaboration to actually do things right. They are further hampered by their own background in that “doing it right” is measurable only by some combination of quarterly financial results and the money flowing into their own pockets.

It doesn’t help that most software devs don’t have the social IQ to feel comfortable saying “no” when they’re handed something they aren’t comfortable with, so they just try to make it work by learning on the fly. Even learning a company-enforced code layout is often left for new hires to figure out themselves. If Notepad++ didn’t have an option to replace tabs with spaces, I’d have screwed up my internship when I found out, after a stern talking-to from my supervisor, that IBM coding (at least at the time) required all spaces and no tabs!

Idk I’m not sure I’d trust any dev who doesn’t consider cyber security in their coding. So much development is centered around security whether that’s auth or input sanitization or SQL query parameterization…

If you’re working on an internal only application with no Internet connectivity then maybe you can ignore cybersec. But only maybe.
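For anyone wondering what the SQL query parameterization mentioned above looks like in practice, here’s a minimal sketch using Python’s standard-library sqlite3 module. The users table and the find_user helper are made up for illustration, not taken from anyone’s real code.

```python
import sqlite3


def find_user(conn, name):
    # Hypothetical helper: the "?" placeholder makes the driver pass `name`
    # as data, so it is never spliced into the SQL text itself.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (name,))
    return cur.fetchall()


# Throwaway in-memory database with an illustrative schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# A classic injection attempt: with string concatenation this could match
# every row; as a bound parameter it is just an odd name that matches nothing.
hostile = "alice' OR '1'='1"

print(find_user(conn, "alice"))
print(find_user(conn, hostile))
```

The point isn’t that this makes you a security expert, just that the safe version is usually no harder to write than the unsafe one.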

No one’s saying to ignore it.

If I own and run a sandwich shop, I don’t need to be on the farm picking and processing the wheat to make the flour that goes into my bread. I could do that, but then I’d be a farmer, a miller, and a sandwich maker. All I need to know is that I have good quality flour or bread so that I can make damn good sandwiches.

I’m confused where cybersec sits in your sandwich analogy. If every time you sold a sandwich someone could use it to steal all the money in your business you’d probably need to know how to prevent reverse sandwich cashouts.

I’m not talking about advanced, domain-specific cybersec. I don’t expect every developer to have the sum total knowledge of CrowdStrike… But in a business environment I don’t see how a developer can not consider cybersec in the code they write. Maybe in an org that is so compartmentalized that you only own a single feature?

In a few words, I’m reiterating the point that a professional software developer =/= professional cyber security expert. Yes, I know that I should, for example, implement auth; but I’m not writing the auth process. I’m just gonna use a library.

Well, at least I know from this that some folks give my résumé the first look! Not exactly more than cold comfort though, especially when I’ve already built a career in business management after a family friend gave me a leg up when the “I don’t want to do my job!” HR sorting bots kept discarding my resume for not having gone to Harvard for either my Bachelor’s or Master’s.

By my part of the hiring cycle, they’ve already gotten past the pipeline / bots. I’m there to do architecture and design questions 😉

But I do read every resume.

… You know not all development is Internet connected right? I’m in embedded, so maybe it’s a bit of a siloed perspective, but most of our programs aren’t exposed to any realistic attack surfaces. Even with IoT stuff, it’s not like you need to harden your motor drivers or sensor drivers. The parts that are exposed to the network or other surfaces do need to be hardened, but I’d say 90+% of the people I’ve worked with have never had to worry about that.

Caveat on my own example, motor drivers should not allow self damaging behavior, but that’s more of setting API or internal limits as a normal part of software design to protect from mistakes, not attacks.
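To make the “internal limits” idea concrete, here’s a rough sketch in Python (real motor drivers would more likely be C, and every name and number here is hypothetical). The setter clamps whatever the caller asks for into a range the hardware can survive, which protects against honest mistakes rather than attackers.

```python
# Hypothetical limit: pretend this motor overheats above 80% duty cycle.
MAX_DUTY = 0.8
MIN_DUTY = 0.0


def clamp_duty(requested, low=MIN_DUTY, high=MAX_DUTY):
    # Force the requested duty cycle into [low, high] so a buggy caller
    # cannot command a self-damaging value.
    return max(low, min(high, requested))


print(clamp_duty(0.5))   # in range, passed through unchanged
print(clamp_duty(1.5))   # too high, limited to MAX_DUTY
print(clamp_duty(-0.2))  # negative, limited to MIN_DUTY
```

Whether to clamp silently or raise an error is a design choice; clamping keeps the motor safe, while an error makes the calling bug more visible.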

It’s fair to point out that not all development is Internet connected, but ~58% of developers work in web dev.

5% in desktop apps

3% in mobile

2.4% in embedded

And then of the remaining, I’d be shocked if more than a few of their domains excluded Internet-facing devices.

https://survey.stackoverflow.co/2023/#section-developer-roles-developer-type

But you’re right to point out development isn’t a monolith. Professionally though: anyone working in a field where cybersecurity is a concern should be thinking about and knowledgeable of cybersec.

I didn’t realize just how siloed my perspective may be haha, I appreciate the statistics. I’ll agree that cyber security is a concern in general, and honestly everyone I know in industry has at least a moderate knowledge of basic cyber security concepts. Even in embedded, processes are evolving for safety critical code.

I mean the classic is that you must be “really good at computers” like I’m okay at debugging, just by being methodical, but if you plop me in front of a Windows desktop and ask me to fix your printer; brother, I haven’t fucked with any of those 3 things in over a decade.

I would be as a baby, learning everything anew, to solve your problem.

fuck printers

fuck you. my uncle was a dot matrix.

That reminds me of one of those shit jokes from the eighties:

“There’s two new ladies in the typing pool who do a hundred times the work of anyone else.”

“What’re they called?”

“Daisy Wheel and Dot Matrix.”

Oh man love it. In the 80s, I used to go to my grandmother’s work after school. She was a stenographer at the neighborhood newspaper in Brooklyn. If she was alive she’d probably love this. My mom ran a copy room for a high school but I think it would go over her head.

Yeah I feel this. Fucking huge mechanical boxes of fucking shite that should be lobbed down the stairs, and anyone who wants to print should be beaten with toner cartridges till they are black and blue, or CMYK

Especially HP printers and honorable mention to Konica Minoltas.

I enjoy your comment so much because your methodical and patient approach to debugging code is exactly what’s required to fix a printer. You literally are really good at computers even if you aren’t armed with a lot of specific knowledge. It’s the absolute worst because troubleshooting without knowledge and experience is painfully slow, and the whole time I’m thinking, “they know so much more about this than I do! If they’d just slow down and read what’s on the screen …” But many people struggle to do even basic troubleshooting. Their lack of what you have makes them inept.

I was gonna say, the OP here sounds perfectly good at computers. Most people either have so little knowledge they can’t even start on solving their printer problem no matter what, or don’t have the problem solving mindset needed to search for and try different things until they find the actual solution.

There’s a reason why specific knowledge beyond the basic concepts is rarely a hard requirement in software. The learning and problem solving abilities are way more important.

I can definitely solve their problems, but I’d have to go through all of the same research they would have to. They’re basically just being lazy and asking us to do their work for them.

My go-to excuse now is “I haven’t used Windows in 10 years” when people call me for tech support.

I literally can’t help them lol

“I don’t know anything about your Apple device, I prefer to own my devices and not have someone else dictate what I can use them for”

I think the difference is that they don’t know where to even start, and we clearly do, and in their minds that’s what separates a perfectly working computer from what is basically a brick.

I use a car analogy for these situations: You need a mechanic (IT professional.) I’m an engineer (coder.) They’re both technically demanding jobs, but they use very different skillsets: IT pros, like mechanics, have to think laterally across a wide array of technology to pinpoint and solve vague problems, and they are very good at it because they do it often.

Software engineers are more like the guy that designed one part of the transmission on one very specific make of car. Can they solve the same problems as IT pros? Sure! But it’ll take them longer and the solution might be a little weird.

Can they solve the same problems as IT pros? Sure! But it’ll take them longer and the solution might be a little weird.

Well the person just wants a solution that works. They didn’t say it has to be the best solution of all solutions.

It’s funny how soon they realize they want a good one.

I work in service design and delivery. It’s my job to understand how devices actually function and interact. Can confirm that dev types can learn the stuff if they want to but most have not. Knowing how to set up your fancy computer with all the IDEs in the world is great but not the same as doing that for 5,000 people at once.

That they have any business telling me how complicated something is or how long something should take for me to implement.

Yeah like “Just add a simple button here”. Yeah of course, the button is not the difficult part.

It’s like they think we just tell the computer what they asked us to do and we’re done.

I was coming here to talk about that recent post saying how easy it is to make a GUI and every program should already have one…

Command line is a GUI, change my mind

That it’s dry and boring and even I must hate it because there’s no place for creativity in a technical field.

The best programmers I know are all super creative. You can’t solve real world problems with the limited tools available to us without creativity.

I’ve been listening to the Stuff You Missed in History Class podcast from the beginning, and whenever something about computers, science or tech comes up they start being like “hush hush, don’t worry, we won’t actually talk about it”, as if the mere mention will scare away listeners

That the business idea, the design, the architecture, and the code for the next multimillion dollar app are just sitting in my head, waiting for the next guy with enough motivation to extract them from me.