Oxford University this week shut down an academic institute run by one of Elon Musk’s favorite philosophers. The Future of Humanity Institute, dedicated to the longtermism movement and other Silicon Valley-endorsed ideas such as effective altruism, closed this week after 19 years of operation. Musk had donated £1m to the FHI in 2015 through a sister organization to research the threat of artificial intelligence. He had also boosted the ideas of its leader for nearly a decade on X, formerly Twitter.

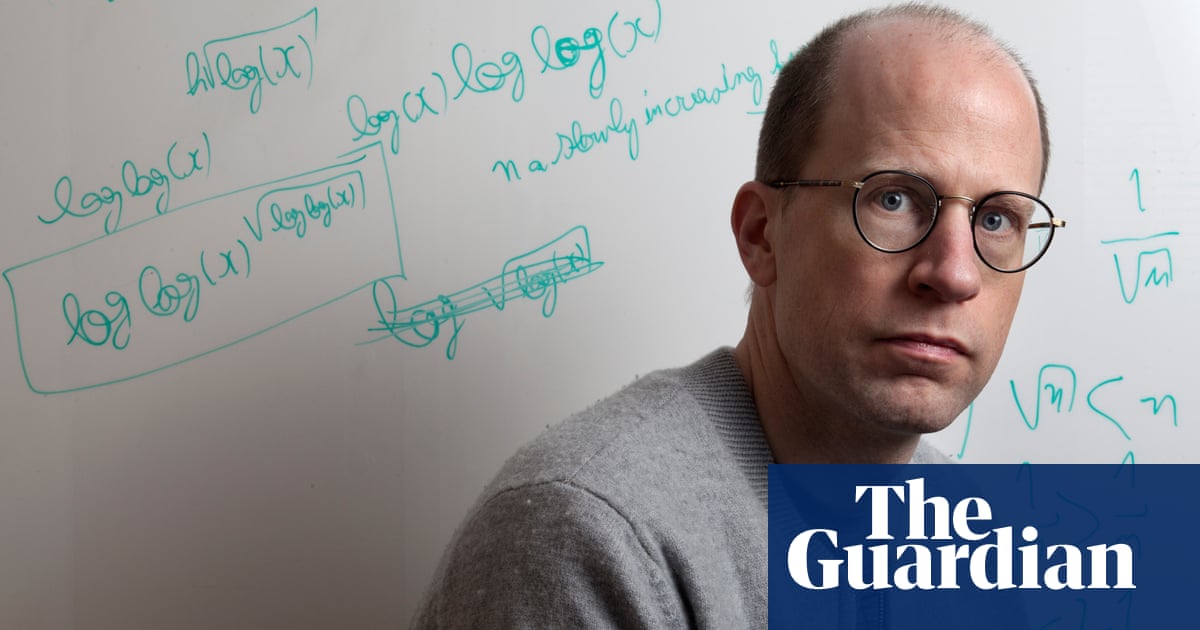

The center was run by Nick Bostrom, a Swedish-born philosopher whose writings about the long-term threat of AI replacing humanity turned him into a celebrity figure among the tech elite and routinely landed him on lists of top global thinkers. OpenAI chief executive Sam Altman, Microsoft founder Bill Gates and Tesla chief Musk all wrote blurbs for his 2014 bestselling book Superintelligence.

…

Bostrom resigned from Oxford following the institute’s closure, he told the Guardian.

The closure of Bostrom’s center is a further blow to the effective altruism and longtermism movements that the philosopher has spent decades championing, which in recent years have become mired in scandals related to racism, sexual harassment and financial fraud. Bostrom himself issued an apology last year after a decades-old email surfaced in which he claimed “Blacks are more stupid than whites” and used the N-word.

…

Effective altruism, the utilitarian belief that people should focus their lives and resources on maximizing the amount of global good they can do, has become a heavily promoted philosophy in recent years. The philosophers at the center of it, such as Oxford professor William MacAskill, also became the subject of immense amounts of news coverage and glossy magazine profiles. One of the movement’s biggest backers was Sam Bankman-Fried, the now-disgraced former billionaire who founded the FTX cryptocurrency exchange.

Bostrom is a proponent of the related longtermism movement, which holds that humanity should concern itself mostly with long-term existential threats such as AI and space travel. Critics of longtermism tend to argue that the movement applies an extreme calculus to the world that disregards tangible current problems, such as climate change and poverty, and veers into authoritarian ideas. In one paper, Bostrom proposed the concept of a universally worn “freedom tag” that would constantly surveil individuals using AI and relay any suspicious activity to a police force that could arrest them for threatening humanity.

…

The past few years have been tumultuous for effective altruism, however, as Bankman-Fried’s multibillion-dollar fraud marred the movement and spurred accusations that its leaders ignored warnings about his conduct. Concerns over effective altruism being used to whitewash the reputation of Bankman-Fried, and questions over what good effective altruist organizations are actually doing, have proliferated in the years since his downfall.

Meanwhile, Bostrom’s email from the 1990s resurfaced last year and resulted in him issuing a statement repudiating his racist remarks and clarifying his views on subjects such as eugenics. Some of his answers – “Do I support eugenics? No, not as the term is commonly understood” – led to further criticism from fellow academics that he was being evasive.

Wow, I didn’t know effective altruism was so bad. When someone explained the basic idea to me some years ago, it sounded quite sensible. Along the lines of: “If you give a man a fish, you will feed him for a day. If you teach a man how to fish, you will feed him for a lifetime.”

Like many things, it’s a reasonable idea - I still think it’s a reasonable idea. It just tends to get adopted and warped by arseholes.

It does on the surface, but the problem is it quickly devolves into extreme utilitarianism. There are some other issues such as:

The underlying idea is reasonable. But it was adopted by assholes and warped into something altogether different.