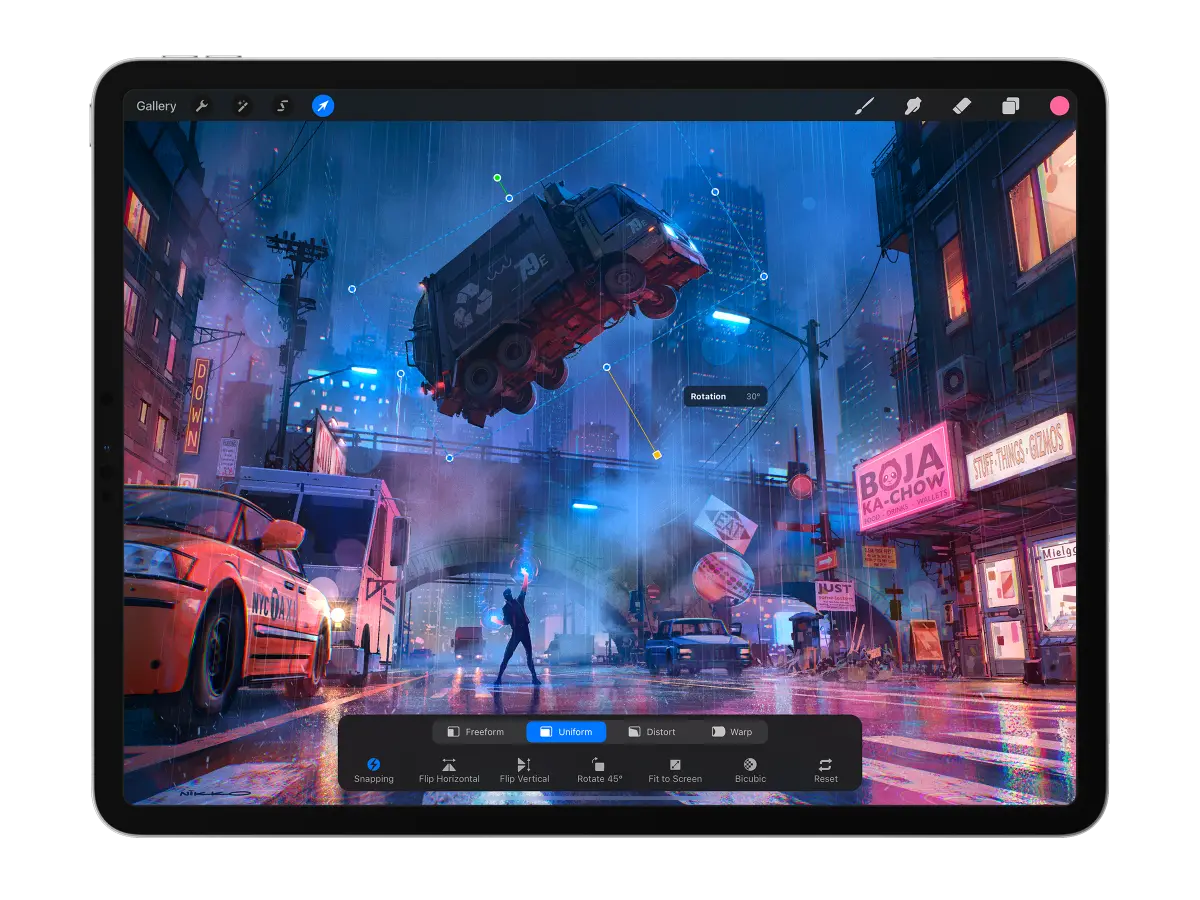

Popular iPad design app Procreate is coming out against generative AI, and has vowed never to introduce generative AI features into its products. The company said on its website that although machine learning is a “compelling technology with a lot of merit,” the current path that generative AI is on is wrong for its platform.

Procreate goes on to say that it’s not chasing a technology that is a threat to human creativity, even though this may make the company “seem at risk of being left behind.”

Procreate CEO James Cuda released an even stronger statement against the technology in a video posted to X on Monday.

CEO James Cuda

the irony

I don’t trust them. They better fire him and hire a Jim Abacus.

The CEO should ideally have the exact same name as the company. Like Tim Apple.

Or Sam Sung.

deleted by creator

Is that what he was saying in Mario 64? “So long, Gary Bowser!”

The more you buy, the more you save!

As with everything, the problem is not AI technology; the problem is capitalism.

End capitalism and suddenly being able to share and openly use everyone’s work for free becomes a beautiful thing.

I agree, but as long as we still have capitalism, I support measures that at least slow down its destructiveness. AI is like a new power tool in capitalism’s arsenal to dismantle our humanity. Sure, we can use it for cool things as well. But right now it’s mostly used to automate the stuff that makes us human: art, music and so on. Not useful stuff like loading the dishwasher for me. More like writing a letter for me to invite my friends to my birthday. Very cool. But maybe the work I put into doing this myself is what makes my friends feel appreciated?

Edit: It’s also nice to at least have one app that takes this maximalist approach, so people can choose. If they half-assed it, more and more AI features would creep in over time, one compromise after the next, until it’s like all the other apps. It’s also important, in a way, to have such a maximalist stance as an anchor for gauging the scale.

This, over and over again.

Going against AI is being a luddite, unaware of the core underlying issue.

I sort of agree. But…

… In a world of adequate distribution and a form of universal income, we should all relish automation.

That doesn’t preclude capitalism (investing for profit, the use of currency, interest rates, etc.), however; it just needs a state with the guts and capability to force redistribution.

What are the realistic alternatives that have been proven to work?

No doubt his decision was helped by the fact that you can’t really fit full image generation AI on iPads - for example Stable Diffusion needs at the very least 6GB of GPU memory to work.

That said, since what they sell is a design app, I applaud him for siding with the interests of at least some of his users.

PS: Is it just me, or is it funny that the guy’s last name is “Cuda”, given that CUDA is the Nvidia technology for running general-purpose computation on their GPUs and hence widely used for this kind of AI?

They all run in the cloud, for commercial products.

Good point, I had forgotten that :/

you can’t really fit full image generation AI on iPads - for example Stable Diffusion needs at the very least 6GB of GPU memory to work.

You can currently run Stable Diffusion and Flux on iPads and iPhones with the Draw Things app. Including LoRAs and TIs and ControlNet and a whole bunch of other options I’m too green to understand.

Technically the app even runs on relatively old devices, though I imagine only at lower resolutions and probably takes ages.

But in my limited experience it works quite well on an iPad Pro and an iPhone 13 Pro.

I want to be more creative with SD. Do you have any recommendations similar to this GIMP plugin? https://github.com/intel/openvino-ai-plugins-gimp

Honestly most of what I’ve learned about how to use SD comes from seeing what other people have done and trying to tweak or adjust to get a feel for the tool and its various models. Spend some time on a site like CivitAI to both see what can be done and to find models. I’m very much a noob and cannot produce results nearly as impressive as a good chunk of what I find on there.

The most important thing I’ve learned is how much generative AI, especially SD, is just a tool. And people with more creativity and a better understanding of the tool use it better, just like every other tool.

I do like the idea of using it in GIMP as an answer to Adobe’s Firefly.

Procreate is amazing. I bought it for my neurodivergent daughter and used it as a non-destructive coloring book.

I’d grab a line drawing of a character she wanted to color from a Google image search, add it to the background layer, and lock the background so she couldn’t accidentally move or erase it. Then I’d have her color on the layer above it, set to the Multiply blend mode, so the black lines can’t be painted over. She got to the point where she prefers to have the colorized version alongside the black and white one, so she can grab colors from the original and do fun stuff like mimic its shading and copy-paste in elements that might have been too difficult for her to render. Honestly, she barely speaks, but in that program she’s already better than most adults, even at age 8. Her work looks utterly perfect, and she knows a lot of advanced blending and cloning techniques that traditional media artists don’t usually know.
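For anyone wondering why the Multiply trick in the comment above protects the line art: in the standard Multiply blend mode, each color channel of the result (normalized to the range 0.0 to 1.0) is the product of the two layers’ values. Black (0.0) therefore stays black whatever is painted over it, while white (1.0) simply takes on the painted color. The sketch below illustrates that general compositing formula in Python; the function and constant names are hypothetical, and this is not Procreate’s actual implementation.

```python
def multiply_blend(base: float, top: float) -> float:
    """Multiply blend for one channel, with values normalized to [0.0, 1.0]."""
    return base * top


def blend_pixel(base_rgb, top_rgb):
    """Apply the multiply blend channel by channel to two RGB tuples."""
    return tuple(multiply_blend(b, t) for b, t in zip(base_rgb, top_rgb))


BLACK = (0.0, 0.0, 0.0)  # a pixel of the locked line art
WHITE = (1.0, 1.0, 1.0)  # an empty area of the line drawing
RED = (1.0, 0.0, 0.0)    # the color painted on the layer above

# The black line stays black even though red was painted over it.
print(blend_pixel(BLACK, RED))  # (0.0, 0.0, 0.0)
# The white background area shows the painted red.
print(blend_pixel(WHITE, RED))  # (1.0, 0.0, 0.0)
```

Because multiplication can only keep a channel the same or darken it, no color on the upper layer can lighten the black outlines, which is exactly what makes the “non-destructive coloring book” setup work.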

That’s heartwarming. Good luck to her! (and you)

You’re a great techno-parent

Thanks. I try my best. 😊

I love Procreate very much but WHY CAN’T I MAKE A BÉZIER CURVE OR ADD TEXT ON MY IPAD PRO AAAA

Built on a foundation of theft

Sums up all AI

EDIT: meant all gen AI

Does it? I worked on training a classifier and a generative model on freely available galaxy images taken by Hubble and labelled in a citizen science approach. Where’s the theft?

Hard to say. Models are often built on top of pretrained models, because training a model from scratch is costly. Your input may not infringe copyright, but the input before or after it may have.

I trained the generative models all from scratch. Pretrained models are not that helpful when it’s important to accurately capture very domain specific features.

One of the classifiers I tried was based on zoobot with a custom head. Assuming the publications around zoobot are truthful, it was trained exclusively on similar data from a multitude of different sky surveys.

I assume you mean all generative AI? Because I don’t think AI that autonomously learns to play Super Mario is theft https://youtu.be/qv6UVOQ0F44

Nintendo probably thinks it’s theft lol

No, it sums up a very specific type of AI…

Blanket statements are dumb.

Can you explain how you came to that conclusion?

The way I understand it, generative AI training is more like a single person analyzing art at impossibly fast speeds, then using said art as inspiration to create new art at impossibly fast speeds.

The art isn’t being made, btw, so much as being copied and pasted in a way that might convince you it was new.

Since the AI cannot create a new style or genre on its own, without source material that already exists to train it, and that source material is often scraped up off of databases, often against the will and intent of the original creators, it is seen as theft.

Especially if the artists were in no way compensated.

To add to your excellent comment:

It does not ask if it can copy the art nor does it attribute its generated art with: “this art was inspired by …”

I can understand why creators are unhappy with this situation.

Do you go into a gallery and scream “THIS ART WAS INSPIRED BY PICASSO. WHY DOESN’T IT SAY THAT? THIS IS THEFT!”? No, I suspect you don’t, because that would be stupid. That’s what you sound like here.

This is absolutely wrong about how something like SD generates outputs. Relationships between atomic parts of an image are encoded into the model from across all training inputs. There is no copying and pasting. Now whether you think extracting these relationships from images you can otherwise access constitutes some sort of theft is one thing, but characterizing generative models as copying and pasting scraped image pieces is just utterly incorrect.

While, yes, it is not copy-and-paste in the literal sense, it does still have the capacity to outright copy the style of an artist’s work that was used to train it.

If tracing another artist’s work is already frowned upon when trying to pass the trace off as one’s own work, then there’s little difference when a computer does it more convincingly.

Maybe this is a bit of a tangent, since I’m not even sure it’s strictly possible, but if a generative system were trained only on, say, Picasso’s work, would you be able to pass the outputs off as Picasso pieces? Or would they be considered the work of the person who wrote the prompt or built the AI? What if the artist weren’t Picasso but someone still alive; would they get a cut of the profits?

The outputs would be considered no one’s, as no copyright is afforded to AI-generated content.

That feels like it’s rather beside the point, innit? You’ve got AI companies showing off AI art and saying “look at what this model can do,” you’ve got entire communities on Lemmy and Reddit dedicated to posting AI art, and they’re all going “look at what I made with this AI, I’m so good at prompt engineering” as though they did all the work, while the millions of hours spent actually creating the art used to train the model get no mention at all, much less any compensation or permission for those works to be used in training. Sure does seem like people are passing AI art off as their own, even if they’re not claiming copyright.

I’m not sure how it could be beside the point, though it may not be entirely dispositive. I take ownership to be a question of who has a controlling and exclusionary right to something; in this case, that’s copyright. Copyright allows you to license these things and extract money for their use. If there is no copyright, there is no secure monetization (something companies using AI-generated materials absolutely keep top of mind). The question was “who would own it,” and I think it’s pretty clear-cut who would own it. No one.

With this logic, photography is painting, painted at an impossibly high speed, but for some reason we distinguish between what humans make and what machines make.

Amusingly, every argument against ai art was made against photography over a hundred years ago, and I bet you own a camera - possibly even on the device you wrote your stupid comment on!

Sure, I even do photography professionally from time to time; I just don’t consider it to be painting.

But art, right?

(edit for clarity: at least in some cases)

Photography can be art, and AI-generated images can be art as well. AI is a tool, and people can create art with it. But what counts as art is completely subjective to the viewer.

That’s a blanket statement. While I understand the sentiment, what about the thousands of “AIs” trained on private, proprietary data for personal or private use by the organizations that own that data? It’s not the technology but the lack of regulation and misaligned incentives.

Nope. Stop with the luddite lies please

While an honorable move, “never” doesn’t exist in a world based on quarterly financials…

Generative AI steals art.

Procreate’s customers are artists.

Stands to reason you don’t piss your customer base off.

Generative AI steals art.

No it doesn’t. Drop that repeated lie please

You’re right, generally: generative AI pirates art and the rest of the content on the internet.

How do you know AI companies straight-up pirate the art and don’t just buy a copy to train their models?

That is at least borderline more correct, but it’s still wrong. It learns using a neural network much like, but much simpler than, the one in your head

It doesn’t “learn” anything; it’s a database with linear algebra. Using anthropomorphic terms only helps entrench this useless and wasteful technology among regular people.

Trying to redefine the word “learn” won’t help your cause either. Stop being a luddite and realise that it is neither useless nor wasteful.

Everyone loves an increased workload, and wow, that’s a lot of power to… do what, exactly?

You don’t need to resort to name calling, you could make a compelling argument instead…

I’m calling you a luddite because you’re being a luddite. AI is just a new medium, that’s all it is, you’re just scared of new technology just like how idiots were scared of photography a hundred and some years ago. You do not have an argument that holds any water because they were all made against photography, and many of them against pre-mixed paints before that!

Also, I’m done arguing with anti-AI luddites, because you are about as intractable as Trump cultists. I’ll respond to a level or two of comments in good faith because someone else might see your nonsense and believe it, but this deep it’s most likely just you and me, and you’re not gonna be convinced of anything.

Stop being a luddite

Is it really not true? How many companies have been training their models on art straight off the internet while completely disregarding its creative licenses and without asking anyone for permission? How many times have people gotten a result from a GenAI model that broke IP rights, or looked extremely similar to an already existing piece of art, and would probably get people sued? And how many of these models have been made available for commercial purposes?

The only logical conclusion is that GenAI steals art because it has been constantly “fed” with stolen art.

It does not steal art. It does not store copies of art, it does not deprive anyone of their pictures, it does not remix other people’s pictures, and it does not recreate other people’s pictures unless very, very specifically directed to do so (and that’s on the human, not the AI), and even then it usually gets things “wrong”. If you don’t completely redefine theft, then it does not steal art.

You don’t need permission to train a model on any art. No IP rights are being broken.

I think that’s a very broad statement. If you need a license to reproduce a piece of art, then surely you need a license to feed it into an AI. It’s the same thing: it’s training the AI to create works similar to that one. At the very least they should compensate you for training their AI for them.

Where do you think it ingests all its content from? The problem isn’t the AI itself, it’s the companies that operate it, but it’s not inaccurate to conflate the two.

I think you’re being a little disingenuous.

I like how you completely dodge his argument with this. If training data isn’t considered transformative, then it’s copyright infringement, like piracy.

Yes, I agree it’s copyright violation. I think maybe you’re not reading my comment correctly? I’m responding to the guy saying that it isn’t copyright violation.

What are you talking about? Did you understand the original comment?

Your initial claim was that MLMs “steal” content to train on, which is plainly false. If MLM training data is theft, then piracy is theft. All this hate should be directed at the legal system that punishes individuals for piracy while enabling corporations to do the same.

You need to take some pills or something, because you’re swinging between two positions at the same time. You had a go at me because you thought I was saying copyright violation is acceptable, and now you’re having a go at me because I corrected you and said I think it’s unacceptable.

Please decide what you actually think before commenting

You’re being disingenuous by trying to redefine the concept of theft. It does not steal anything by any definition of the word. It learns using a neural network similar to, but much simpler than, the one in your head.

Theft is defined in law. If something is stolen, i.e. it is not compensated for, then it is theft. You can’t get around it by going “oh well, technically it’s transformative by a non-human intelligence”; that doesn’t work. The law does not recognize AI systems as intelligent entities, so they are not capable of transformative work.

This isn’t a matter of personal opinion it’s just what the law is. You can’t argue about it.

I’m impressed you’ve managed to go from “wrong” to “not even wrong” - that is so far from correct that you can’t even conceive of the right answer. Stop being a luddite

How about “it’s complicated”? It certainly doesn’t steal art and it certainly does lower the need for humans to create art.

Honestly, the need for art has nothing to do with the urge to create art. People will create art no matter what, and capitalism treats them like shit for it, but that’s a totally different argument.

Lmao keep telling yourself that

Sure. I’ll keep telling others that too because I’m right

deleted by creator

They’re chasing profit too, though. “Taking a stand” means they’re advertising, trying to differentiate themselves from their competitors and draw in people who hold anti-AI views.

That will last until that segment of users becomes too small to be worth trying to base their business on.

Well, sounds great. I almost wish more companies would advertise to that market, really.

It’s like… I know you’re lying, and I know you probably don’t actually care, but some of your competitors couldn’t even be bothered to do this much. Those companies thought shitting on things I care about to maximize profit was the better strategy. I’ll take that into consideration in my future decisions.

And if the situation changes, if they turn around and go full in on generative AI, we’ll just have to consider that too. That’s life.

Of course, I believe using alternatives that are more resistant to these kinds of market trends (community built software, perhaps?) would be ideal, but it’s not always an option.

They specifically called out generative AI, though. Stuff like separating photographs into individual pieces doesn’t require generative AI specifically; machine learning models that fall under the general umbrella of AI already exist for object segmentation.

Never, eh? Well, someone won’t exist under the same name/promise in a decade or two.

That stance will change if they ever get acquired. Might even get the chance to see James Cuda try and walk back this stance in a few years.

So definitely gonna have AI baked in by next year.

Very good news for artists. AI image generation is founded upon art theft, and art theft is something that artists are not fond of, so it’s really nice to see the developer being open about his respect for the artists who use the app!

Ironically, I think AI may prove to be most useful in video games.

Not to outright replace writers, but so they can instead focus on feeding backstory to the AI so it essentially becomes the characters they’ve created.

I just think it’s going to be inevitable and the only possible option for a game where the player truly chooses the story.

I just can’t be interested in multiple choice games where you know that your choice doesn’t matter. If a character dies from option a, then option b, c, and d kill them as well.

Realising that as a kid instantly ruined Telltale games for me, but I think AI used in the right way could solve that problem, at least to some degree.

deleted by creator

I’ve no idea where you’re getting these predictions from. I think some of them are fundamentally flawed, if not outright incorrect, and don’t reflect real life trends of generative AI development and applications.

Gonna finish this comment in a few, please wait. Edit: there we go.

One by one, somewhat sorted from “Ok, I see it,” to “What the hell?”

Wall of text

Generative AI is going to result in a hell of a lot of layoffs and will likely ruin people’s lives.

It’s arguably already ruining many artists’ lives, yeah. I haven’t seen any confirmed mass layoffs in the game industry due to AI just yet. Some articles claimed that Rayark, developer of Deemo and Cytus, fired many of its artists, but they later denied this.

AI is going to revolutionize the game industry.

Maybe. If you’re talking AI in general, it’s already been doing so for a long time. Generative AI? Not more so than most other industries, and that’s less than you’d expect.

AI is going to kill the game industry as it currently exists

I doubt such dramatic statements will come true, unless you’re very generous in how loosely they can be interpreted.

Generative AI will lead to a lot of real-time effects and mechanics that are currently impossible, like endless quests that don’t feel hollow, realistic procedural generation that can convincingly create everything from random clutter to entire galaxies, true photorealistic graphics (look up gaussian splatting, it’s pretty cool), convincing real-time art filters (imagine a 3d game that looks like an animated Van Gogh painting), and so on.

There’s a bit to unpack, here.

- With better hardware and more efficient models, I can see more generative AI being used for effects and mechanics, but I don’t think we’re seeing revolutionary uses anytime soon.

- While time could change this, model generation doesn’t seem too promising compared to just paying good 3D artists. That said, they don’t need to be perfect, good enough models would already be game (ha ha) changing.

- Endless quests that don’t feel hollow… might be entirely beyond current generative AI technologies. Depends on what you mean by hollow.

Generative AI will eventually open the door to small groups of devs being able to compete with AAA releases on all metrics.

That’s quite the bold statement. On some aspects, I’d be willing to hear you out, but on all metrics? That’s no longer a problem of mere technology or scale, it’s a matter of how many resources each one has available. Some gaps cannot be bridged, even by miraculous tech. For example, indies do not have the budget to license expensive actors (e.g. Call of Duty, Cyberpunk 2077), brands (e.g. racing games), and so on. GenAI will not change this. Hell, GenAI will certainly not pay for global advertising.

Generative AI will make studios with thousands of employees obsolete. This is a double-edged sword. Fewer employees means fewer ideas; but on the other side, you get a more accurate vision of what the director originally intended. Fewer employees will also mean that you will likely have to be a genuinely creative person to get ahead, instead of someone who knows how to use Maya or Photoshop but is otherwise creatively bankrupt. Your contribution matters far more in a studio of <50 than it does in a studio of >5,000; as such, your creative skill will matter more.

Whoa, whoa, slow down, please.

Generative AI will make studios with thousands of employees obsolete.

Generative AI is failing to deliver significant gains to most industries. This article does a wonderful job of showing that GenAI is actually quite limited in its applications, and its benefits much smaller than a lot of people think. More importantly, it highlights how the market itself is widely starting to grasp this.

Fewer employees means fewer ideas; but on the other side, you get a more accurate vision of what the director originally intended.

Game development can’t be simplified like this! Famously, the designers and artists for the genre-defining game Dark Souls were given a lot of freedom in production at the request of director Hidetaka Miyazaki himself. Regardless of what you think of the results, including the diversity of others’ visions… was the director’s vision!

Fewer employees will also mean that you will likely have to be a genuinely creative person to get ahead, instead of someone who knows how to use Maya or Photoshop but is otherwise creatively bankrupt. Your contribution matters far more in a studio of <50 than it does in a studio of >5,000; as such, your creative skill will matter more.

Again, that’s assuming a lot and simplifying too much. I know companies that reduced their employee count, where what happened instead is that those capable of playing office politics remained, while workers who just diligently did their part got the boot. I’m not saying that’s what always happens! But none of us can accurately predict exactly how large organizations will behave solely based on employee count.

A lot of people will have to be retrained because they will no longer be creative enough to make a living off of making games.

I admit, this is just a nitpick, but I don’t like the way this is phrased. Designers still have their wisdom, artists are still creative, workers remain skilled. If hiring them is no longer advantageous due to financial incentives to adopt AI, that’s not their fault for being insufficiently creative.

deleted by creator

I think the big difference is that you seem to think that AI has peaked or is near its peak potential, while I think AI is still just getting started.

That’s a fair assessment. I’m still not sure if popular AI tech is on an exponential or a sigmoid curve, though I tend towards the latter. But the industry at large is starting to believe it’s just not worth it. Worse, the entities at the forefront of AI are unsustainable—they’re burning brightly right now, but the cash flow required to keep a reaction on this scale going is simply too large. If you’ve got time and are willing, please check the linked article by Ed (burst damage).

I mean, maybe I could have phrased it better, but what else are you gonna do?

My bad, I try to trim down the fat while editing, but I accidentally removed things I shouldn’t. As I said, it’s a nitpick, and I understand the importance of helping those who find themselves unhirable. Maybe it’s just me, but I thought it came across a little mean, even if it wasn’t your intent. I try to gently “poke” folks when I see stuff like this because artists get enough undeserved crap already.

deleted by creator

What evidence is there that gen AI hasn’t peaked? They’ve already scraped most of the public Internet to get what we have right now, what else is there to feed it? The AI companies are also running out of time–VCs are only willing to throw money at them for so long, and given the rate of expenditure on AI so far outpaces pretty much every other major project in human history, they’re going to want a return on investment sooner rather than later. If they were making significant progress on a model that could do the things you were saying, they would be talking about it so that they could buy time and funding from VCs. Instead, we’re getting vague platitudes about “AGI” and meaningless AI sentience charts.

Yeah, ultimately a lot of devs are trying to make “story generators” that rely on the user’s imagination to fill in the blanks; hence RimWorld is so popular.

There’s a business/technical model where “local” LLMs would kinda work for this too, if you set it up like the Kobold Horde. The dev hosts a few GPU instances for players whose GPUs can’t handle the local LLM, but users with beefy PCs also generate responses for other users (optionally, at a low priority) in a self-hosted horde.
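The comment doesn’t spell out how such a horde would schedule work. As one hypothetical reading of “optionally, with a low priority”, each volunteer machine could keep a priority queue in which the owner’s own requests always jump ahead of requests relayed from other players. The class and constant names below are invented for illustration and are not part of the Kobold Horde or any real API; a real system would also need networking, fallback to the dev-hosted instances, and abuse protection.

```python
import heapq
import itertools

# Hypothetical priority levels: a lower number is served first.
OWN_REQUEST = 0    # the machine owner's own game session
HORDE_REQUEST = 1  # requests relayed from other players


class WorkerQueue:
    """Hypothetical job queue for one volunteer GPU in a self-hosted horde."""

    def __init__(self):
        self._heap = []
        # Monotonic counter as a tie-breaker, so jobs with the same
        # priority are served in the order they were submitted.
        self._counter = itertools.count()

    def submit(self, prompt: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), prompt))

    def next_job(self):
        """Return the next prompt to generate, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, prompt = heapq.heappop(self._heap)
        return prompt


queue = WorkerQueue()
queue.submit("other player: describe a tavern", HORDE_REQUEST)
queue.submit("owner: NPC greeting", OWN_REQUEST)
queue.submit("other player: bounty quest text", HORDE_REQUEST)

print(queue.next_job())  # owner: NPC greeting
print(queue.next_job())  # other player: describe a tavern
print(queue.next_job())  # other player: bounty quest text
```

The counter matters because `heapq` compares tuples element by element; without it, two jobs with equal priority would be ordered by their prompt strings rather than by arrival time.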

Something like using a LLM to make actually unique side quests in a Skyrim-esque game could be interesting.

The side quest/bounty quest shit in something like Starfield was fucking awful because it was like, 5 of the same damn things. Something capable of making at least unique sounding quests would be a shockingly good use of the tech.

Didn’t Krita say the same thing at one time?

It’s currently one of the best programs to generate AI art using self hosted models.

deleted by creator

You can do that?

Generate images with self hosted models, or integrate it with art programs? Because yes to both.

I mean, ok, it’s not like anyone using Procreate is going to use AI generation in it anyway…